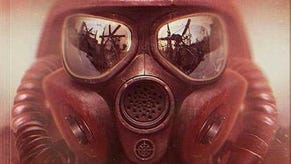

Tech Interview: Metro 2033

Oles Shishkovstov on engine development, platform strengths and 4A's design philosophy.

From the start we selected the most "difficult" platform to run on. A lot of decisions were made explicitly knowing the limits and quirks we'd face in the future. As for me personally, the PS3 GPU was the safe choice because I was involved in the early design stages of NV40 and it's like a homeland. Reading Sony's docs it was like, "Ha! They don't understand where those cycles are lost! They coded a sub-optimal code-path in GCM for that thing!" And all of that kind of stuff.

But THQ was reluctant to take a risk with a new engine from a new studio on what was still perceived to be a very difficult platform to program for, especially when there was no business need to do it. As for now I think it was a wise decision to develop a PC and console version. It has allowed us to really focus on quality across the two platforms.

One thing to note is that we never ran Metro 2033 on PS3, we only architected for it. The studio has a lot of console gamers but not that many console developers, and Microsoft has put in a great effort to lower the entry barrier via their clearly superior tools, compilers, analysers, etc.

Overall, personally I think we both win. Our decision to architect for the "more difficult" platform paid off almost immediately. The whole game was ported to 360 in 19 working days. Although they weren't eight-hour days.

When your rendering engine employs deferred shading you have a lot more flexibility to do this. From the start you have several LDR buffers in different colour-spaces that are not yet shaded. It's only at the end of the pipeline (the shading itself) that you have the HDR output. And yes, at that point we split the HDR data into two 10-bit per-channel buffers, and then run post-processing on them, producing the single 10-bit per-channel image which is sent to the display.

The PS3 uses the same approach except that buffers are 8-bit per channel. The buffers are full 720p by the way. The PC-side is slightly different: we don't split the output before post-processing, running everything in a single FP16 buffer.
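The interview does not say how the HDR value is divided between the two buffers. As a minimal sketch of one plausible scheme, the Python below keeps a fine-grained copy of the [0, 1] range in one buffer and a coarse copy of the full range in the other. `HDR_MAX`, `split_hdr` and `merge_hdr` are hypothetical names and values chosen for illustration, not 4A's encoding; the `bits` parameter covers the 10-bit and 8-bit cases mentioned above.

```python
HDR_MAX = 16.0  # assumed brightest representable value; not from the interview


def quantize(x, bits):
    """Clamp x to [0, 1] and quantise it to an unsigned integer code."""
    levels = (1 << bits) - 1
    return round(min(max(x, 0.0), 1.0) * levels)


def split_hdr(value, bits=10):
    """Split one HDR channel value into (low, high) integer codes."""
    low = quantize(value, bits)             # fine steps across [0, 1]
    high = quantize(value / HDR_MAX, bits)  # coarse steps across [0, HDR_MAX]
    return low, high


def merge_hdr(low, high, bits=10):
    """Recover an approximate HDR value from the two codes."""
    levels = (1 << bits) - 1
    if low < levels:
        # Low buffer is not saturated, so it holds the precise value.
        return low / levels
    # Low buffer saturated: fall back to the coarse high-range buffer.
    return max(1.0, high / levels * HDR_MAX)


low, high = split_hdr(4.0)
restored = merge_hdr(low, high)
```

With this layout, dark values keep full precision from the low buffer, while bright values above 1.0 survive with coarser steps in the high buffer.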

The PC version has all of these techniques available (although we aren't sure yet what to allow in the final build). The 360 ran deferred rotated grid super-sampling for the last two years, but we later switched it to AAA. That gave us back around 11MB of memory and dropped the AA GPU load from a variable 2.5-3.0ms to a constant 1.4ms. The quality is quite comparable.

The AAA works slightly differently from how you assume. It doesn't have explicit edge-detection. The closest explanation of the technique I can imagine would be that the shader internally doubles the resolution of the picture using pattern/shape detection (similar to morphological AA) and then scales it back to the original resolution, producing the anti-aliased version.

Because the window of pattern-detection is fixed and rather small in the GPU implementation, the quality is slightly worse for near-vertical or near-horizontal edges than with, for example, MLAA.
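No shader code is given in the interview. As a rough CPU-side illustration of the idea described above (double the resolution with pattern detection, then scale back down), the sketch below swaps in the well-known EPX/Scale2x upscaling rules for the pattern-detection step and a 2x2 box filter for the downscale. This is a stand-in technique on a greyscale image, not 4A's AAA shader; all function names are hypothetical.

```python
def epx_upscale(img):
    """Double the resolution using EPX/Scale2x pattern rules (a stand-in)."""
    h, w = len(img), len(img[0])

    def px(r, c):
        # Clamp coordinates so border pixels reuse their own row/column.
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for r in range(h):
        for c in range(w):
            p = img[r][c]
            up, right = px(r - 1, c), px(r, c + 1)
            left, down = px(r, c - 1), px(r + 1, c)
            # A sub-pixel copies a neighbour only when the two neighbours
            # adjacent to it agree and the opposite ones differ (a corner).
            tl = up if (left == up and left != down and up != right) else p
            tr = right if (up == right and up != left and right != down) else p
            bl = left if (down == left and down != right and left != up) else p
            br = down if (right == down and right != up and down != left) else p
            out[2 * r][2 * c], out[2 * r][2 * c + 1] = tl, tr
            out[2 * r + 1][2 * c], out[2 * r + 1][2 * c + 1] = bl, br
    return out


def box_downsample(img):
    """Average each 2x2 block back down to a single pixel."""
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, len(img[0]), 2)
        ]
        for r in range(0, len(img), 2)
    ]


def pattern_aa(img):
    """Upscale with pattern detection, then scale back to the original size."""
    return box_downsample(epx_upscale(img))


# A hard diagonal staircase: 1.0 is lit, 0.0 is dark.
staircase = [
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]
smoothed = pattern_aa(staircase)
```

On this input, an interior staircase pixel such as `smoothed[1][1]` becomes 0.75 and its dark neighbour `smoothed[0][1]` becomes 0.25, while flat regions are left untouched. Because each pixel only looks at its four direct neighbours, long near-horizontal or near-vertical edges get little help, which mirrors the small-window limitation described above.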

Yes, all the techniques can work together although the performance hit will be too much for the current generation of hardware.

Speaking from the Metro 2033 perspective, it was an easy choice! The player spends more than half of the game under the ground. That means deep dark tunnels and poorly-lit rooms (there are no electricity sources apart from the generators). From the engine perspective - to make it visually interesting, convincing and thrilling - we needed a huge amount of rather small local light sources. Deferred lighting is the perfect choice.
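To make the appeal of deferred lighting with many small local lights concrete, here is a minimal CPU-side sketch, assuming a G-buffer that stores position, normal and albedo per pixel and point lights with a simple linear falloff. The `Texel` and `PointLight` types and the falloff model are illustrative assumptions, not the engine's actual data layout. The point is that lighting cost depends on pixels and the lights that reach them, and a light outside its radius contributes nothing.

```python
import math
from dataclasses import dataclass


@dataclass
class Texel:
    """One G-buffer entry: attributes stored before any lighting happens."""
    position: tuple
    normal: tuple   # assumed unit length
    albedo: float


@dataclass
class PointLight:
    position: tuple
    intensity: float
    radius: float   # the light has no effect beyond this distance


def light_texel(texel, lights):
    """Accumulate diffuse light from every light that reaches this texel."""
    total = 0.0
    for light in lights:
        to_light = tuple(l - p for l, p in zip(light.position, texel.position))
        dist = math.sqrt(sum(v * v for v in to_light))
        if dist == 0.0 or dist >= light.radius:
            continue  # small local lights are skipped cheaply when out of range
        n_dot_l = sum(n * v for n, v in zip(texel.normal, to_light)) / dist
        falloff = 1.0 - dist / light.radius  # assumed linear falloff
        total += light.intensity * max(n_dot_l, 0.0) * falloff
    return total


def shade(gbuffer, lights):
    """Final pass: combine stored albedo with the accumulated lighting."""
    return [[t.albedo * light_texel(t, lights) for t in row] for row in gbuffer]


gbuffer = [[Texel((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.5)]]
lights = [
    PointLight((0.0, 0.0, 1.0), 2.0, 2.0),   # in range, facing the surface
    PointLight((0.0, 0.0, 5.0), 2.0, 2.0),   # too far away, skipped
    PointLight((0.0, 0.0, -1.0), 2.0, 2.0),  # behind the surface, adds nothing
]
image = shade(gbuffer, lights)
```

Adding another light only adds one more loop iteration per affected pixel, regardless of how much geometry produced the G-buffer.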

Their implementation seems to be badly optimised. Otherwise why do they have pre-calculated light-mapping? Why do they light dynamic stuff differently to the rest of the world, with light-probe-like stuff?

From our experience you need at least 150 full-fledged light-sources per frame to have indoor environments look good and natural, and many more to highlight things like eyes, etc. It seems they just missed that performance target.

That's a natural extension of a "traditional" deferred rendering solution. Actually I don't know why others don't do that. While being deferred you first store some attributes (later used by shading) in several buffers, then you light the scene, and then shade it. So to do a mirror, for example, all you need to do is store mirrored attributes in the first pass and everything works as usual. We use such a system a lot for water, mirrors and everything else which is reflective.
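As a small sketch of what "storing mirrored attributes" could look like, the Python below reflects a surface position and normal across a mirror plane before they are written to the attribute buffers. The plane representation (`dot(n, x) + d = 0` with a unit normal) and the function names are assumptions for illustration; the interview does not describe how the engine computes the mirrored values.

```python
def reflect_point(p, plane_n, plane_d):
    """Reflect point p across the plane dot(plane_n, x) + plane_d = 0."""
    dist = sum(a * b for a, b in zip(p, plane_n)) + plane_d  # signed distance
    return tuple(a - 2.0 * dist * b for a, b in zip(p, plane_n))


def reflect_direction(v, plane_n):
    """Reflect a direction (such as a surface normal) across the plane."""
    k = sum(a * b for a, b in zip(v, plane_n))
    return tuple(a - 2.0 * k * b for a, b in zip(v, plane_n))


def mirrored_attributes(position, normal, albedo, plane_n, plane_d):
    """Attributes to store in the first pass for the reflected surface."""
    return {
        "position": reflect_point(position, plane_n, plane_d),
        "normal": reflect_direction(normal, plane_n),
        "albedo": albedo,  # material data is unchanged by the mirror
    }


# Mirror lying in the z = 0 plane, reflecting a surface point above it.
attrs = mirrored_attributes(
    position=(1.0, 2.0, 3.0),
    normal=(0.0, 0.0, 1.0),
    albedo=0.8,
    plane_n=(0.0, 0.0, 1.0),
    plane_d=0.0,
)
```

Once the reflected position and normal sit in the buffers, the usual lighting and shading passes can treat them like any other pixel, which is the "everything works as usual" property described above.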

On PC we do even more interesting deferred stuff; we do deferred sub-scattering specifically tailored for human skin shading. But that's another story...