First look: Nvidia DLSS 3 - AI upscaling enters a new dimension

The new DLSS boosts performance by up to five times.

Two key PC technologies started to emerge towards the end of 2018 - hardware-accelerated ray tracing and machine learning based super-sampling. Forming the basis of Nvidia's brand change from GTX to RTX, the technologies have continued to be refined across the years. With the arrival of the new RTX 4000 graphics line, we have a new innovation in performance-boosting technology. DLSS 3 adds AI frame generation to its existing DLSS 2-based spatial upscaling. We've been putting the technology through its paces for the last ten days and we're impressed by the results.

Nvidia supplied us with a GeForce RTX 4090 ahead of time, along with incomplete preview builds of three DLSS 3-enabled titles: the path-traced Portal RTX, Marvel's Spider-Man and Cyberpunk 2077. The latter shouldn't be confused with the new RT Overdrive version and has more in common with the existing retail version, just with DLSS 3 added. Even running maxed, RTX 4090 and DLSS 3 allow these games to run nigh-on flawlessly on a 4K 120Hz screen. Nvidia is talking about DLSS 3 as an enabler for next generation experiences, showing its highly impressive Racer RTX, Portal RTX and the RT Overdrive version of Cyberpunk - which, believe it or not, is effectively a path-traced rendition of the game. Marvel's Spider-Man? Nvidia has shown a promotional video with RTX 4090 running the game at 200fps. Unfortunately, we are not able to show our own frame-rate numbers in this content - only performance multipliers.

At the nuts and bolts level, DLSS 3 is actually a suite of three different technologies Nvidia has spent years developing. It starts with the existing, highly successful DLSS 2 - currently our top pick for image-reconstruction based upscaling (though Intel XeSS and AMD FSR 2.x are getting closer). This is joined by DLSS frame generation. Essentially, the GPU renders two frames and then inserts a new frame between the two, generated via a mixture of game data such as motion vectors along with optical flow analysis, delivered by a revised fixed function block in the new Ada Lovelace architecture - which Nvidia says is three times faster than the last-gen Ampere.
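
To make the ordering concrete, here is a toy Python sketch of the idea: two rendered frames go in, and one generated frame is slotted between them. It is only an illustration. The `Frame` type, the single 1D `position` and the midpoint calculation are assumptions made for the example, standing in for the motion vectors, optical flow analysis and AI model that the real technology relies on.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    time_ms: float    # point in game time the frame represents
    position: float   # toy 1D position of a single on-screen object
    generated: bool   # True if synthesised rather than traditionally rendered


def generate_between(a: Frame, b: Frame) -> Frame:
    """Toy stand-in for frame generation: place the object halfway between
    two rendered frames. Real DLSS 3 does not use simple averaging."""
    return Frame((a.time_ms + b.time_ms) / 2, (a.position + b.position) / 2, True)


def with_frame_generation(rendered: list[Frame]) -> list[Frame]:
    """Interleave one generated frame between each pair of rendered frames."""
    presented = []
    for prev, nxt in zip(rendered, rendered[1:]):
        presented.append(prev)
        presented.append(generate_between(prev, nxt))
    if rendered:
        presented.append(rendered[-1])
    return presented


# Four hypothetical rendered frames, 16ms apart, object moving 10 units per frame.
rendered = [Frame(i * 16.0, i * 10.0, False) for i in range(4)]
presented = with_frame_generation(rendered)

for frame in presented:
    kind = "generated" if frame.generated else "rendered"
    print(f"{frame.time_ms:5.1f}ms  position={frame.position:5.1f}  {kind}")
```

Note that a generated frame can only be produced once the rendered frame after it exists, which is why the newest rendered frame has to be held back before it is shown.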

Because frames are now being buffered, extra latency is added to the pipeline, which Nvidia seeks to mitigate with its lag-reduction technology, Reflex. At best, Reflex will nullify the extra lag caused by the extra buffering and perhaps even knock off further milliseconds. At worst, the game may have some extra latency added - we'll share some initial findings later on. There's nothing stopping you from skipping frame generation altogether and simply banking the lag reduction Reflex offers, if that's what you prefer. Because of the speed of the optical flow analyser in Ada Lovelace, prior Turing and Ampere cards cannot run DLSS frame generation. For owners of RTX 2000 and RTX 3000 series cards, this means that DLSS 3 supported titles still offer DLSS 2 upscaling and Reflex latency benefits, but frame generation is off the table.

In looking at how the buffering works for frame generation, I'm reminded of the old AFR (alternate frame rendering) techniques used with SLI - where two graphics cards worked in tandem rendering every other frame. This had a similar increase in latency, but without the mitigation of Reflex. So, in effect, DLSS frame generation on the same GPU is taking the place of the second graphics card from the SLI days. Still, the bottom line is that the likes of DLSS 2/FSR 2.x/XeSS speed up rendering and reduce latency - frame generation does not. The impact on lag in the test games we had is not an issue, but I don't think the technology is a good fit for ultra-fast esports titles where every millisecond of lag counts for the top players.

We also need to contend with the notion that the generated frames are not as 'perfect' as the traditionally rendered ones. Extremely fast motion - particularly close to the camera - may cause artefacts. Also, HUD elements have no motion vectors for the technology to track, which causes issues of its own. In actual gameplay though, the problems are minimal. Acceleration is taking most games to 120fps or in excess of that, meaning per-frame persistence is very low. Meanwhile, remember those generated frames are sandwiched by 'perfect' traditionally rendered ones. In our video content, you'll see 120fps captures running at half-speed - even there, the visual discontinuities are hard to pick up. It's only really with prolonged eyeballing that you can tell where DLSS 3 frame generation has fallen short.

Even then, the results of the new technique - rendered in 3ms by the GPU - far exceed the best of the offline frame-rate upscalers out there. To put that to the test, we captured identical content from Marvel's Spider-Man using DLSS 3, stacked up against 60fps captures using Adobe After Effects' Pixel Motion technology and Topaz Video Enhance AI's Chronos SlowMo V3 model. The per-frame calculation cost there on a Ryzen 9 5950X backed by an RTX 3090 is 750ms and 125ms respectively. Because DLSS 3 is integrated into the game, with access to crucial engine data and backed by specific hardware acceleration on the silicon, it achieves superior results. It should go without saying that all of these techniques are superior to the 'motion smoothing' used in today's televisions - as they are limited to real-time frame interpolation, the results are inevitably poorer than the Adobe and Topaz shots shown here, where DLSS 3 is already providing improved results.

Improved performance is the point of the exercise - but so is its application in enabling new experiences. Portal RTX is built on Nvidia's new RTX Remix platform, which looks like some kind of crazy science fiction dream. Essentially, Remix is integrated into older titles, allowing for fully path-traced renditions of classic PC games. In Nvidia's keynote, we saw how Morrowind received a new RT look but we've actually been hands-on with Portal RTX - and it's a truly beautiful new way to look at the game.

We'll be talking about how path tracing integrates with Portal closer to its release, but in the meantime, in our testing it revealed the biggest performance increases of all. Path tracing is exceptionally heavy on the GPU, and the heavier the workload, the bigger the performance uplift provided - not only by DLSS 3 frame generation but by DLSS 2 upscaling too. The table below shows a 3.19x performance uplift from DLSS 2 on its own, which rises to 5.29x with the addition of frame generation. In the screenshot, you'll see a 'worst case scenario' I put together with water and two portals. Also note the latency numbers: in this case, Nvidia Reflex is indeed nullifying the extra lag introduced by frame generation buffering. It feels the same as the DLSS 2 version, which is in turn, far more responsive than native rendering.

| Portal RTX Test Chamber 14 | Perf Differential | Latency, Reflex Off | Latency, Reflex On |
|---|---|---|---|
| Native 4K | 100% | 129ms | 95ms |
| DLSS 2 Performance | 317% | 59ms | 53ms |
| DLSS 3 Frame Generation | 529% | - | 56ms |

Marvel's Spider-Man presents an altogether different challenge: even with a Core i9 12900K, today's GPUs can be easily bottlenecked by the CPU when the game's ray traced reflections are enabled. Looking at the screenshot directly below, you can see that this quicktime event only sees a 15.2 percent increase in frame-rate with DLSS 2. Bearing in mind that we're talking about a 1080p base image AI upscaled to 4K, we should be seeing far higher performance. What's actually happening here is that at native 4K, we're GPU constrained, while DLSS 2 sees us hitting the CPU limit.

Because DLSS 3 frame generation does not rely on the CPU preparing instructions for the frames it creates, the performance increase kicks in despite the CPU being fully tapped out. The whole process is completely independent of the processor. To see this in motion, check out Nvidia's promotional video, concentrating on city traversal - the most CPU-intensive part of the game. The vast majority of the action in that trailer will be CPU-constrained at around 100-120fps. DLSS 3 frame generation is effectively doubling the frame-rate.
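
The bottleneck logic can be sketched in a few lines of Python. This is a simplified model, not a measurement: the base frame-rate is taken as whichever of the CPU or GPU is slower, and frame generation is assumed to double the presented frame-rate outright, which is an idealisation. The function name and every frame-rate figure below are hypothetical, chosen only to show the shape of the behaviour.

```python
def presented_fps(cpu_fps: float, gpu_fps: float, frame_generation: bool = False) -> float:
    """Simplified model: the slower of CPU and GPU sets the base frame-rate,
    and frame generation (idealised here as a clean 2x) is applied on top."""
    base = min(cpu_fps, gpu_fps)
    return base * 2 if frame_generation else base


# Hypothetical numbers for illustration only - not measured results.
CPU_LIMIT = 100.0      # what the CPU can feed the GPU
GPU_NATIVE_4K = 85.0   # GPU throughput at native 4K
GPU_DLSS2 = 180.0      # GPU throughput with DLSS 2 upscaling

native = presented_fps(CPU_LIMIT, GPU_NATIVE_4K)
dlss2 = presented_fps(CPU_LIMIT, GPU_DLSS2)
dlss3 = presented_fps(CPU_LIMIT, GPU_DLSS2, frame_generation=True)

print(f"Native 4K: {native:.0f}fps (GPU-limited)")
print(f"DLSS 2:    {dlss2:.0f}fps (CPU-limited, +{(dlss2 / native - 1) * 100:.1f}%)")
print(f"DLSS 3:    {dlss3:.0f}fps (frame generation on top of the CPU limit)")
```

In this model, upscaling frees up far more GPU headroom than the CPU can use, so the gain from DLSS 2 is capped, while frame generation still multiplies whatever base frame-rate the CPU allows.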

For the table below, I tried to tax the GPU as much as possible - and weirdly, Peter Parker's visits to Feast HQ are far more impactful on graphics. Even so, with just a 36 percent boost to performance, we still hit the CPU limit. Frame generation continues to increase frame-rate, however. Also noteworthy here is that Reflex doesn't help latency much with DLSS 3 - the tech works by optimising the relationship between CPU and GPU, which is hard to achieve if the CPU is hitting its performance limit. Even so, the game is so fast that the latency figures are extremely low across the board.

| Marvel's Spider-Man Feast HQ | Perf Differential | Latency, Reflex Off | Latency, Reflex On |
|---|---|---|---|
| Native 4K | 100% | 39ms | 36ms |
| DLSS 2 Performance | 136% | 24ms | 23ms |
| DLSS 3 Frame Generation | 219% | - | 38ms |

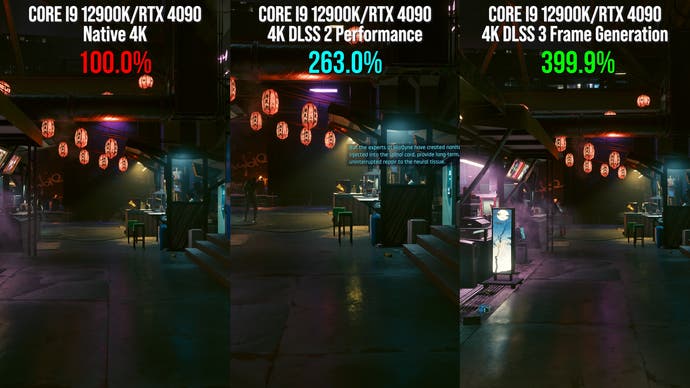

The final title provided for testing was a preview build of Cyberpunk 2077 from CD Projekt RED. In the video, there are two tests covering traversal through the Cherry Blossom Market along with a longer drive through Night City and out into the desert. With settings ramped up at 4K resolution and full RT in place - up to and including the Psycho lighting setting - there's more evidence that the lower the base frame-rate, the bigger the performance multiplier.

In this case, frame-rates increase by up to a factor of four - again, transforming one of the most demanding PC video games into an experience that plays out beautifully on a 4K 120Hz display. In the video embedded at the top of the page, you'll see a fair amount of 4K 120fps capture slowed down to 50 percent speed to work in a 60fps video. You'll get an idea of the fluidity there.

In this pre-release preview code, Nvidia Reflex latency figures with DLSS 3 can't match DLSS 2 with Reflex off, which I expect to be the 'unofficial' target. Even so, the 12ms deficit recorded here is hardly going to be that detrimental to the experience of most triple-A fare, including Cyberpunk 2077. After all, this isn't a twitch shooter or an esports competitive experience - but with that said, we'll definitely need to see how latency fares in more DLSS 3 titles going forward.

| Cyberpunk 2077 Market | Perf Differential | Latency, Reflex Off | Latency, Reflex On |
|---|---|---|---|
| Native 4K | 100% | 108ms | 62ms |
| DLSS 2 Performance | 258% | 42ms | 31ms |
| DLSS 3 Frame Generation | 399% | - | 54ms |

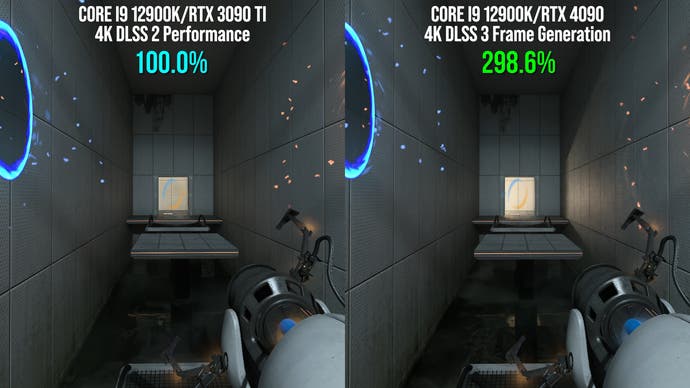
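
For readers who want to pull the frame generation contribution out of the three tables above, the short Python sketch below does the arithmetic. The figures are copied from the tables; the dictionary layout and function names are just conveniences for this example. It computes how much frame generation adds on top of DLSS 2, and how DLSS 3 latency with Reflex on compares to DLSS 2 with Reflex off.

```python
# Figures taken from the three tables in this article.
results = {
    "Portal RTX Test Chamber 14": {
        "dlss2_pct": 317, "dlss3_pct": 529,
        "dlss2_reflex_off_ms": 59, "dlss3_reflex_on_ms": 56,
    },
    "Marvel's Spider-Man Feast HQ": {
        "dlss2_pct": 136, "dlss3_pct": 219,
        "dlss2_reflex_off_ms": 24, "dlss3_reflex_on_ms": 38,
    },
    "Cyberpunk 2077 Market": {
        "dlss2_pct": 258, "dlss3_pct": 399,
        "dlss2_reflex_off_ms": 42, "dlss3_reflex_on_ms": 54,
    },
}


def frame_gen_uplift(row: dict) -> float:
    """Extra multiplier from frame generation relative to DLSS 2 alone."""
    return row["dlss3_pct"] / row["dlss2_pct"]


def latency_delta_ms(row: dict) -> int:
    """DLSS 3 (Reflex on) latency minus DLSS 2 (Reflex off) latency."""
    return row["dlss3_reflex_on_ms"] - row["dlss2_reflex_off_ms"]


for name, row in results.items():
    print(f"{name}: frame generation adds {frame_gen_uplift(row):.2f}x over DLSS 2, "
          f"latency delta {latency_delta_ms(row):+d}ms")
```

On these numbers, the uplift from frame generation over DLSS 2 lands somewhere between roughly 1.5x and 1.7x in each test scene rather than a clean 2x, and the Cyberpunk 2077 latency delta matches the 12ms deficit mentioned above.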

Wrapping up the testing, we have some limited data on how RTX 4090 shapes up in performance terms against the last-gen Ampere architecture's silicon champion: the RTX 3090 Ti. Apart from not disclosing frame-rate numbers, the only other restriction Nvidia asked for was to limit gen-on-gen comparisons to DLSS 2 on the older card versus DLSS 3 on the new. The rationale is that pure performance numbers should be held back for the review day embargo, where users can compare performance from numbers provided by the entirety of the PC press. While a limited DLSS 2 vs DLSS 3 comparison may not be completely ideal, I'd say that it does represent the likely use-case scenario of those cards.

Looking at Portal RTX first, the image there is from a static scene where I engineered the highest GPU load I could muster from Test Chamber 14. This has water in full view, along with two portals facing one another. DLSS 2 on Ampere vs DLSS 3 on Ada Lovelace essentially provides a three-times increase to performance overall. It is game-changing in that at the most basic level, a good experience on a 4K 60Hz variable refresh rate screen runs close to flawlessly on a 4K 120Hz display.

The same can be said of the preview build of Cyberpunk 2077 we played, where the performance multiplier gen-on-gen may not be as large as Portal RTX but the base frame-rate on the RTX 3090 Ti side is larger. Once again, it's the difference between a good 60Hz VRR experience on the older card up against a great 120Hz experience with RTX 4090.

| | RTX 3090 Ti DLSS 2 | RTX 4090 DLSS 3 |
|---|---|---|
| Portal RTX Stress Test | 100% | 291% |
| Cyberpunk 2077 Market | 100% | 247% |

Let's conclude the piece by getting down to brass tacks, tackling the obvious questions. First of all: does image quality from the AI generated frames hold up? This depends on the speed of the action and the ability of the DLSS 3 algorithm to track movement. The faster the movement, the less precise the generated frames - the Spider-Man running image in the zoomer block above is a particularly challenging example. Switch to full-screen view for each image and move between frames one, two and three. The discontinuities in the second AI generated frame are easy to see - but are they easy to see with each frame persisting for just 8.3 milliseconds? The answer is... not really. Also pay attention to how different Spider-Man's arms and legs are from frame to frame: it indicates how fast the motion is in these three images, across a total of 24.9ms game time.

Now look at the third-person Spider-Man image comparison to the left of it in the zoomer block. Again, switch to full image mode and cycle between the three frames, as captured across a total of 24.9ms. This represents something closer to normal motion within the game. In this scenario, the DLSS 3 generated frame is close to perfect, with only the yellow HUD element having issues. Played out on a 120Hz screen, this presents as a touch of flicker.
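
The persistence figures quoted here fall straight out of the frame-rate, as this small Python sketch shows. The function names are made up for the example. Unrounded, three frames at 120fps span 25ms; the 24.9ms figure comes from multiplying the rounded 8.3ms value by three.

```python
def frame_persistence_ms(fps: float) -> float:
    """How long each frame stays on screen at a given frame-rate."""
    return 1000.0 / fps


def span_ms(frame_count: int, fps: float) -> float:
    """Total game time covered by a run of consecutive frames."""
    return frame_count * frame_persistence_ms(fps)


for fps in (60, 120):
    print(f"{fps}fps: {frame_persistence_ms(fps):.1f}ms per frame, "
          f"{span_ms(3, fps):.1f}ms across three frames")
```

At 120fps, a flawed generated frame is on screen for half as long as any single frame at 60fps, which goes some way to explaining why the artefacts are hard to spot in motion.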

The next of the obvious questions: why isn't DLSS 3 frame generation available on RTX 2000 and 3000 cards? Nvidia says that the optical flow analyser in Ada Lovelace is three times faster than the Ampere equivalent, which would have profound implications for DLSS 3's 3ms generation cost. On a separate note, the analyser is a fixed function block that'll run just as fast on any RTX 4000 card. The only alternative I could imagine for older cards would be a lower quality version of the technology. One thing that Alex Battaglia and I noticed in image quality comparisons with Adobe's Pixel Motion and Topaz Video Enhance AI's Chronos SlowMo model is that playing out at 120fps at 8.3ms per frame, even poor-looking AI frames can pass muster played back in real-time.

Next up, let's tackle how frame generation overcomes the CPU limit. In Marvel's Spider-Man, our tests with the Core i9 12900K doubled performance and the game still felt smooth to play - even though the base frame-rate was entirely held back by the CPU. However, frame generation can also be called frame amplification. If the CPU isn't supplying good frame-times, stutter can be magnified too. For my own curiosity, I tried playing Marvel's Spider-Man with RT on a lowly Ryzen 3 3100 - a CPU that has no chance of supplying consistent frame-times. The frame-rate increased dramatically with frame generation, but the stutter was amplified too. There are great applications for DLSS 3 in overcoming CPU-limited games - like Microsoft Flight Simulator, for example - but good consistent frame-times from the CPU are still required.
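
A toy Python model helps show why a higher frame-rate does not smooth over stutter. It assumes, purely for illustration, that frame generation splits every rendered frame interval into two equal presented intervals; the frame-times are invented and the function names are hypothetical. The average frame-rate doubles, but a hitch remains just as large relative to the frames around it.

```python
from statistics import median


def split_intervals(frame_times_ms: list[float]) -> list[float]:
    """Toy model of frame generation: each rendered interval becomes two
    equal presented intervals (an assumption, not measured behaviour)."""
    presented = []
    for t in frame_times_ms:
        presented.extend([t / 2, t / 2])
    return presented


def average_fps(frame_times_ms: list[float]) -> float:
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def hitch_ratio(frame_times_ms: list[float]) -> float:
    """Worst frame-time relative to the typical (median) frame-time."""
    return max(frame_times_ms) / median(frame_times_ms)


# Invented frame-times: a steady 10ms cadence with one 40ms CPU-side hitch.
base = [10.0, 10.0, 10.0, 40.0, 10.0, 10.0]
generated = split_intervals(base)

print(f"Base:      {average_fps(base):.1f}fps, hitch ratio {hitch_ratio(base):.1f}x")
print(f"Generated: {average_fps(generated):.1f}fps, hitch ratio {hitch_ratio(generated):.1f}x")
```

In this model the hitch is no smaller relative to its neighbours after frame generation, and it now interrupts a much faster cadence, which is one way to read the idea of stutter being amplified.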

Going into this testing, the plan was to cover DLSS 3 in broad strokes without spoiling too much of the full review. However, the work ended up being more comprehensive than we imagined. The thing is, we've yet to scratch the surface of what DLSS 3 offers and how it should be tested.

In terms of unknowns we're still looking to test, there's the question of just how low the base frame-rate can be, post DLSS 2. For example, visual discontinuities in AI generated frames are hard to see when gaming at an amplified 120 frames per second, but what about 100fps? 90fps? 80fps? At the extreme level, could DLSS 3 actually work in making a 30fps game look like 60fps? Are there inherent weaknesses in the image interpolation that are common from game to game? This is pioneering stuff that we've never seen from a GPU before.

The longer term implications are interesting and it's with Cyberpunk 2077's RT Overdrive upgrade that we see something potentially very exciting. This is a game transformed, with all lighting in the game achieved via ray tracing. In effect, it's a path-traced rendition of one of the most demanding PC games on the market. Consoles could never do this - it's way beyond their capabilities. By offering two different renderers, we're seeing the preservation of multi-platform development while at the same time offering a totally transformed next generation PC experience. It's an enticing thought and we'll be returning to DLSS 3 and Cyberpunk 2077 in future content.