The big interview: how Intel Alchemist GPUs and XeSS upscaling will change PC gaming

"I think the industry is looking forward to another player."

Last week, Intel finally laid down its cards. Architecture Day 2021 saw the company deliver an array of truly exciting new products, stretching across CPUs and graphics, from laptop to datacentre. The firm is looking to massively accelerate its compute performance, by a factor of 1000 over several years. It's a seemingly impossible task, but Intel wants to achieve it by leveraging the state of the art in CPU, GPU and integration technology. A core part of the strategy lies in delivering competitive graphics performance - and that's where Intel's new line of discrete GPUs comes to the fore... and they're looking superb.

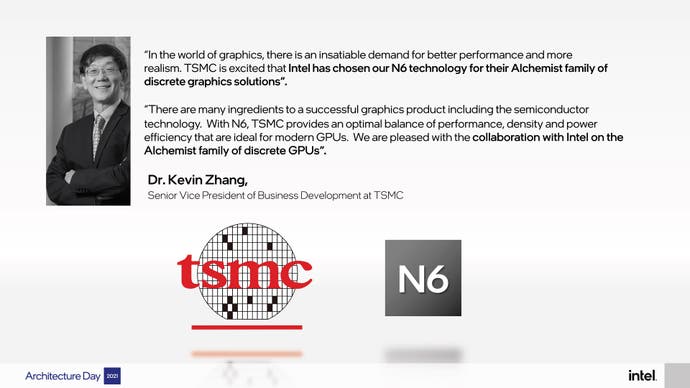

Codenamed Alchemist, the new GPU looks to take Intel's existing graphics tech - found in integrated form in its Tiger Lake processors and in limited release via the DG1 graphics card - and expand it out in all directions: more execution units (96 in DG1, up to 512 in DG2), more power, more memory bandwidth, plus all the speed and efficiency advantages of TSMC's new 6nm fabrication process.

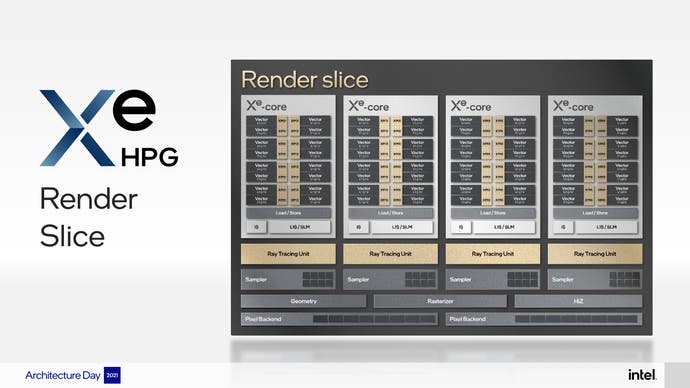

But over and above that, there are new features too. In fact, looking at a block layout of the Alchemist GPU, the philosophy seems much closer to Nvidia's than to AMD's. Where Team Red focused on rasterisation performance and memory bandwidth optimisations over hardware-accelerated RT and machine learning features, Intel offers a balance much closer to the GeForce line, with significantly more silicon dedicated to those next-gen features.

But those features need to be used, of course, and that's why Intel's reveal of XeSS is so important. Based on the slides and information gleaned from Architecture Day (and this interview), Intel seems to be following the same approach as Nvidia does with its DLSS 2.x technology. However, its deployment strategy is very different: Intel wants to standardise machine learning-based super-sampling and has no interest in making it proprietary in the way that Nvidia does with DLSS. XeSS will work on a range of GPUs from a range of vendors, which is precisely the right strategy to ensure that the technology becomes part and parcel of PC gaming.

And all of that lays the foundation for this interview, edited for clarity, in which #FriendAndColleague Alex Battaglia and I quizzed Intel vice president and head of graphics software Lisa Pearce, along with engineering fellow Tom Petersen, about what to expect from Alchemist, just how open and standardised XeSS will actually be, the work the firm has done on the software stack and how the future of graphics looks from Intel's perspective. And for the record, I've never worn a baseball cap...

Digital Foundry: Obviously, moving into the dedicated graphics arena is a really interesting development for Intel. It's been a long time in development, and you've made no secret of your move here, but what is the overall strategy? Why are you getting into the dedicated graphics space?

Lisa Pearce: If you look at it, it's an incredible market. Many of us have been working in integrated graphics for a long time. Intel's been in the graphics business for two decades, doing lower power, constrained form factor solutions - and the market's hungry, I think, for more options in discrete graphics. So, we're super excited to go into it. We definitely have big aspirations with Xe: it's a scalable architecture, not just for client, but also datacentre. It's a very interesting time, and I think the industry is looking forward to another player there.

Digital Foundry: Absolutely. There has been a convergence in terms of the needs of the markets, right? Obviously, Nvidia made a huge impact in terms of AI and ray tracing, and all of this can converge with the gaming market - and it's great to see that Intel is joining the fray as well. So we've had the Alchemist GPU revealed. And I'm going to ask the question that you don't want me to ask - but where do you expect it to land in terms of the overall market? What is this GPU going to do for gamers? Which is to say, that's my question that doesn't involve the word 'performance' but is about performance!

Tom Petersen: Well, I would say of course, Richard, we're not going to talk about performance, so I can't directly answer your question. But I can say that as you walk through what is Alchemist, it is a full-featured gaming GPU first, right? There can be no question in your mind. And it's not an entry level GPU, it's definitely a competitive GPU. It's got all the features that are needed for next generation gaming. So, without talking about performance, I'm pretty excited about it.

Digital Foundry: Fantastic. And it looks like you're going to be first to the market with TSMC 6nm - interesting choice there. Can you actually quantify the advantages that this process might give you over what's already out on the market and what you'll be going up against in Q1 2022?

Tom Petersen: Well, again, that's getting a little bit too close to performance. But obviously, being on the next generation process ahead of other chips gives us an advantage. And there are plenty of experts out there who can give technology roadmap projections, just not this one!

Digital Foundry: Looking at the architecture, we can see there's a focus on rasterisation, of course, but ray tracing and machine learning are front and centre, which is a kind of different strategy than we saw with AMD and RDNA 2. And it's more akin to what we see from Nvidia, focusing on machine learning and dedicated ray tracing cores, accelerating things like ray traversal, for example. Intel's going with that kind of approach, how come?

Tom Petersen: Well, to me, it's just the way that technology is evolving naturally. If you think about AI as being applied to literally every vertical application in the world, for sure, it's going to have a major impact on gaming. You can actually get better results than classic techniques by fusing AI-style compute with traditional rendering. And I think we're just at the beginning of that technology. Today, it's mostly working on post-rendered pixels. But obviously, that's not the only place that AI is going to change gaming. So, I think that's just a natural evolution.

And again, when you think about ray tracing, it delivers a better result than traditional rendering techniques. It doesn't work everywhere and obviously, there's a performance trade-off. But it's just a great technique to improve the life of gamers. So, I think that these trends are going to continue to accelerate. And I think it's fantastic that there's some alignment actually, because that alignment between hardware vendors like Intel and Nvidia and even AMD... that alignment allows the ISVs to see a stable platform of features. And that grows the overall market for games and that's really what is good for everybody.

Digital Foundry: Just from an architectural standpoint, I'm curious. Why rely on dedicated hardware, for example, for the ray tracing unit, instead of repurposing current units that the GPU may already have, and relying on just general compute, for example?

Tom Petersen: The big reason there is that the nature of what's happening when you're doing ray traversal is very different. The algorithms are very different from a traditional multi-pixel per chunk, you know, multiple things in parallel. So think of it as trying to find where these rays intersect triangles. It's a branching style of function, and you just need different styles of units. So that's really algorithmic: the preference would be, 'hey, let's run everything on shaders', but the truth is that's inefficient - although I do know that Nvidia did make a version of that available for their older GPUs. And do you remember the performance difference between the dedicated hardware and the shader? It's dramatic. And it's because the algorithm is very, very different.
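To illustrate why traversal is "a branching style of function", here is a minimal sketch of a generic stack-based bounding volume hierarchy (BVH) walk in Python. It is a textbook technique, not a description of Intel's ray tracing unit; the `Node` layout and function names are our own, and the final ray-triangle tests are omitted. The thing to notice is that each ray takes its own path through the tree depending on which boxes it hits, which suits independent fixed-function units far better than lock-step shader lanes.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3                         # axis-aligned bounding box, min corner
    hi: Vec3                         # axis-aligned bounding box, max corner
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    triangles: Tuple[int, ...] = ()  # leaf payload: triangle ids


def hits_box(origin: Vec3, inv_dir: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: does the ray overlap the box at some t >= 0?"""
    t_near, t_far = 0.0, float("inf")
    for o, inv, a, b in zip(origin, inv_dir, lo, hi):
        t1, t2 = (a - o) * inv, (b - o) * inv
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def traverse(root: Node, origin: Vec3, direction: Vec3):
    """Stack-based BVH traversal; returns candidate triangle ids and nodes visited."""
    # A huge finite value stands in for 1/0 so axis-parallel rays avoid NaNs.
    inv_dir = tuple(1.0 / d if d != 0 else 1e30 for d in direction)
    stack, candidates, visited = [root], [], 0
    while stack:
        node = stack.pop()
        visited += 1
        if not hits_box(origin, inv_dir, node.lo, node.hi):
            continue  # data-dependent branch: prune this whole subtree
        if node.left is None and node.right is None:
            candidates.extend(node.triangles)  # leaf: hand off to triangle tests
        else:
            stack.extend(c for c in (node.left, node.right) if c is not None)
    return candidates, visited


left = Node((0, 0, 0), (1, 1, 1), triangles=(0, 1))
right = Node((2, 0, 0), (3, 1, 1), triangles=(2,))
root = Node((0, 0, 0), (3, 1, 1), left, right)
print(traverse(root, (0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))  # ([0, 1], 3)
```

Two neighbouring rays can visit different nodes in a different order, so a SIMD shader processing them together ends up waiting on its slowest lane.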

Digital Foundry: A question on overall strategy: you've shown one chip design, although there was a slide with two chips. I'm assuming the plan is going to be that you'll have a stack, right? You will have different parts, there won't be just one GPU coming up. Does the DG1 silicon still have a role to play, or was that almost like a test run as such?

Lisa Pearce: Yeah, DG1 was our first step: a way to work through a lot of different issues and make sure we prepped our stack, getting the driver tuned and ready. So, DG1 was very fundamental for us. But really, Alchemist is the start of our high-performance graphics GPUs. And there'll be many following, which is why we shared some of those code names. It's definitely a multi-year approach.

Digital Foundry: Let's move on to something that's just as fundamental to the project as the silicon, which is the software stack. This looks like it's been an ongoing effort over many years. I guess I first started to take notice of Intel graphics more directly with Ice Lake [10th generation Core], and obviously, you've come on leaps and bounds since then. But what is the strategy in developing the software stack? Where do you actually want to be when you launch in Q1 2022? Where are you now, and what are the major accomplishments to date?

Lisa Pearce: Well, you know, we've been preparing for Xe HPG for some time. And a big part of that is the driver stack having an architecture that can be scalable from integrated graphics with LP to HPG. And even as you talked about some of the other architectures with HPC, as well. And so, it's fundamental in the driver design, and it started last year. Last year at Architecture Day, we talked about Monza, it was a big change to our 3D driver stack to prepare for that scaling. So that's the first fundamental, then after that, trying to have the maturity in how we tune for different segments, different performance points and really squeeze out every aspect of each unique architecture product point.

So, within the driver, we've had four main efforts going on, especially this year as we prepare for this launch. The first three are more general, and the last is specific to HPG. First, local memory optimisations: how well do we use it? What's our memory footprint? Are we putting the right things in local memory for each title? Second, game load time performance: we saw about a 25 percent average reduction in load times this year. Some titles are much heavier, and the work is continuing, so we have a lot to do there heading into Q1 for launch. Third was CPU utilisation in CPU-bound titles. We stated things conservatively at 18 percent on average, [but] some titles [saw an] 80 percent reduction. That was really the maturity of the Monza stack that we rolled out, and trying to squeeze the performance from that. And then the last one, of course: how well does the driver feed HPG, the larger architecture? All of these are continuing. It's constant monitoring and tuning as new games and new workloads arrive, especially on DX11/DX12, and you'll see that continue through Q1 for the launch.

Digital Foundry: Okay, because the perception was that when we moved into the era of the low-level APIs, driver optimisation on the vendor side would take a backseat to what's happening with the developer. But that hasn't happened, right?

Tom Petersen: No, it doesn't work that way. I mean, the low level APIs have given a lot more freedom to ISVs and they've created a lot of really cool technologies. But at the end of the day, the driver still has to do a heavy lift; the compiler alone is a major contributor to overall frame performance. And that's going to continue to be something that we're going to work on, for sure.

Digital Foundry: Not just looking at the latest titles and the latest APIs, does Intel have any plans to increase its performance and compatibility with legacy titles in the early DX11 to pre-DX11 era, perchance?

Lisa Pearce: A lot of [driver optimisation] is based on popularity, more than anything, so we try to make sure that top titles people are using, those are the highest priority, of course... some heavy DX11 but also DX9 titles. Also, based on geographies, it's a different makeup of the most popular titles and APIs that are used - so it is general. But of course, the priority tends to fall heaviest with some of the newer modern APIs, but we still do have even DX9 optimisations going on.

Tom Petersen: There's a whole class of things that you can do to applications. Think of it as things you can do outside the application, like, 'Hey, you can make the compiler faster, you can make the driver faster.' But what else can you do? There are some really cool things that you can be doing graphically, even treating the game as sort of a black box. And I think of all of that stuff as implicit: it's things that happen without game developer integration. But there's a lot more you can do when you start talking about game integration. So I feel like Intel is at the place where we're on both sides: we have some things that we're doing implicitly, and a lot of things that are explicit.

Digital Foundry: Okay. In terms of implicit driver functions, for example, does Intel plan to offer more driver features when its HPG line does eventually come out? Things like half refresh rate v-sync, controllable MSAA, VRS over-shading, for example, because I know Alchemist does support hardware VRS and it can use over-shading for VR titles. Are there any very specific, interesting things that we should expect at the HPG launch?

Tom Petersen: There are a lot of interesting features. I think of it as the goodness beyond just being a great graphics driver, right? You need to be a great performance driver, and competitive on perf per watt and actually perf per transistor; you need to have all that. But then you also need to be pushing forward on the features beyond the basic graphics driver, and you'll hear more about that as we get closer to the HPG launch. So I'd say yes, I'm pretty confident.

Digital Foundry: Okay, so I want to move on to XeSS. We've got a demo, showing it in action. It's hugely exciting, because we've been massive advocates of machine learning and its applications. And it's absolutely fantastic to see a proper competitor to DLSS entering the market that could run across more hardware, which I think is key to its take-up. So, the first thing I want to ask you is: what was the drive, from the Intel perspective, to make an image reconstruction technique in general, and why make it driven by machine learning?

Tom Petersen: Well, I would say there's a continuum of performance and quality. If you think about it, you render at low res and you can get high frame-rate, or if you render at high res, you tend to get low frame-rate. And the question is, can we do better than that? And the answer turns out to be, yeah, if you start thinking about novel ways to re-use information from prior frames, or from prior histories of games, you can take in all that other information and use it to reconstruct a better frame - and that's really what's happening with all of these AI based, super-rendering or super-sampling techniques.

And at the end of the day, it's hard work, don't get me wrong. It's rocket science, and we have some of the best AI people in the world working on it. But the results speak for themselves: you can actually get a better result by either interpolating or integrating information across multiple frames, and then adding to that information that can be trained into a neural network from looking at millions or hundreds of millions of frames from other games. It's really just a spectacular technology.
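Petersen's point about integrating information across multiple frames can be shown with a deliberately simplified, one-dimensional sketch. To be clear, this is not XeSS: there's no neural network here, just hand-written jittered sampling, integer motion-vector reprojection and a fixed blend weight, all of which are our own assumptions. What it does show is why a temporal technique can recover detail that a single-frame upscaler never sees.

```python
from typing import List, Optional

Pixels = List[Optional[float]]


def render_low(scene: List[float], factor: int, jitter: int) -> List[float]:
    """Hypothetical renderer: one sample per low-res pixel, offset by a sub-pixel jitter."""
    return [scene[i * factor + jitter] for i in range(len(scene) // factor)]


def spatial_upscale(low: List[float], factor: int) -> List[float]:
    """Single-frame upscale: nearest neighbour, no history, no motion vectors."""
    return [v for v in low for _ in range(factor)]


def reproject(history: Pixels, motion: int) -> Pixels:
    """Shift last frame's output by an integer motion vector; uncovered pixels become None."""
    n = len(history)
    return [history[i - motion] if 0 <= i - motion < n else None for i in range(n)]


def temporal_accumulate(history: Pixels, low: List[float], factor: int,
                        jitter: int, motion: int, alpha: float = 0.5) -> Pixels:
    """Blend this frame's jittered samples into the reprojected history."""
    history = reproject(history, motion)
    out = list(history)
    for i, v in enumerate(low):
        x = i * factor + jitter
        prev = history[x]
        out[x] = v if prev is None else alpha * v + (1 - alpha) * prev
    return out


scene = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0]  # "ground truth" at output res
print(spatial_upscale(render_low(scene, 2, 0), 2))
# [0.0, 0.0, 20.0, 20.0, 40.0, 40.0, 60.0, 60.0]

history: Pixels = [None] * len(scene)
for jitter in (0, 1):  # static scene, so the motion vector is 0
    history = temporal_accumulate(history, render_low(scene, 2, jitter), 2, jitter, motion=0)
print(history == scene)  # True
```

The single-frame upscaler can only repeat the samples it has, while two jittered frames together cover every output pixel. Real reconstruction has to cope with moving objects, disocclusion and sub-pixel motion, which is where a trained network earns its keep.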

Digital Foundry: So it seems the way you're describing it there that there is a large training process in the background, perhaps based on highly super-sampled images of certain games. And then the inference based upon the weights generated from that are done in real time on the GPU.

Tom Petersen: Yeah, of course. And we have multiple flavours of that inference. But the cool part is that they're generic, in the sense that they're not trained on a specific title. The inference works across multiple games, because at the end of the day, they're all very, very similar. And I think of it as almost the best of both worlds, where you can kind of say, take this engine, train it on a bunch of data from different games and then use that across multiple different titles to get great results.

Digital Foundry: Okay, talking about the different inferences and the paths you mentioned there, your presentation specifically mentioned the XMX path as well as the DP4A path. Could you perhaps go into more detail about which ones are specific to the Intel architecture and which ones aren't, as well as, perhaps differences in performance and perceptual image quality that each one might have on Intel architecture?

Tom Petersen: So, the truth is that people confuse all these rendering techniques and post-render techniques, and they kind of blend them all together into 'imagery gets better'. But there are some really different things going on. In general, there's something that I think of as upscaling, or sometimes people call it 'super resolution'. What you're doing there is taking a low resolution image from a single frame and blowing it up using multiple different techniques. That is a very high performance technique that gives you an okay result in many cases, but it doesn't have all the information available to it: it doesn't know about prior rendered frames, it doesn't know about motion vectors, and it doesn't really know about the history of all the frames that have ever been generated.

So if you compare that upscaling technique, or upsampling - I think upscaling is a better word - if you compare that technology to what's happening with something like XeSS, in XeSS, we're taking multiple frames of the game. And we're looking at motion vectors and we're also looking at prior rendered frames that have been trained into a network. So effectively, we're looking at a lot more information to generate that new frame, that has a better characteristic than traditional upscaling.

Now, when you think about how you run that XeSS algorithm, the initial ones we've talked about are the XMX engines, which are systolic arrays; that's kind of the traditional method of doing fast inference on a GPU. The other method is DP4A, which is a simpler form that can be more broadly adopted across multiple different architectures. So I think of it as: on hardware platforms from Intel that support the core engine, we expect to make XeSS available on that device. So that's pretty cool, right? You kind of say, we have multiple backends that all plug in underneath a common API. And to me, the most important thing is that ISVs are looking for these common APIs, so they can do one integration.

And then underneath that integration, you could have multiple implementations of the engine without ISVs having to re-integrate and re-evaluate every time. So our expectation is that that's exactly what XeSS is: it has a standardised API that could even work across multiple vendors. Part of the key strategy of XeSS is to be open: let's get these APIs out there, and let other people implement underneath them, so that we can make the life of ISVs a little bit easier. And over time, the hope is that this kind of stuff will, of course, move up into cross-industry, standardised APIs, but all that takes time. So what we're thinking is, hey, let's get our first version out there, make it awesome, then publish it, make the APIs open, and over time, it gets standardised.
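DP4A generally refers to an instruction that multiplies four pairs of signed 8-bit integers packed into 32-bit registers and adds the sum to a 32-bit accumulator (CUDA, for instance, exposes it as the `__dp4a` intrinsic). Quantised int8 dot products like this are a basic building block of neural network inference on GPUs that lack dedicated matrix hardware. The Python below is only a functional emulation of those semantics, with helper names of our own choosing; it says nothing about how Intel's DP4A path, or its XMX systolic arrays, are actually implemented.

```python
def pack_int8x4(vals):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 in the low byte)."""
    assert len(vals) == 4 and all(-128 <= v <= 127 for v in vals)
    word = 0
    for lane, v in enumerate(vals):
        word |= (v & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Inverse of pack_int8x4: sign-extend each byte back to a Python int."""
    lanes = []
    for lane in range(4):
        b = (word >> (8 * lane)) & 0xFF
        lanes.append(b - 256 if b >= 128 else b)
    return lanes


def wrap_int32(x):
    """Emulate two's-complement wrap-around of a 32-bit accumulator."""
    return (x + 2**31) % 2**32 - 2**31


def dp4a(a_word, b_word, acc):
    """acc + dot(a, b) over four int8 lanes, accumulated in 32 bits."""
    a, b = unpack_int8x4(a_word), unpack_int8x4(b_word)
    return wrap_int32(acc + sum(x * y for x, y in zip(a, b)))


def int8_dot(a, b):
    """Dot product of two int8 vectors (length a multiple of 4), four lanes per dp4a step."""
    assert len(a) == len(b) and len(a) % 4 == 0
    acc = 0
    for i in range(0, len(a), 4):
        acc = dp4a(pack_int8x4(a[i:i + 4]), pack_int8x4(b[i:i + 4]), acc)
    return acc


print(dp4a(pack_int8x4([1, 2, 3, 4]), pack_int8x4([5, 6, 7, 8]), 10))  # 80
print(int8_dot([1, 2, 3, 4, 5, 6, 7, 8], [1] * 8))                      # 36
```

Because the operation is this simple, it maps onto a wide range of existing GPUs, which is what makes a DP4A fallback attractive for a cross-vendor upscaler, at the cost of lower throughput than dedicated matrix engines.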

Digital Foundry: So as part of that, you put your own SDK - your own API - out there that may eventually trickle upwards into something more broadly standardised... Is the first iteration actually using Microsoft DirectML at all?

Tom Petersen: No. Now, that's a good question: why not? The truth is we have our own internal programming language that we're using for the high performance kernels that are part of the implementation of XeSS. All that stuff right now is very Intel 'in kitchen', super-optimised fused kernels. Beautiful, you know, almost like a dude coding in assembly back in the day. I can imagine Richard with a tiny little ball cap doing that. We have a whole room of those people making XeSS just perfect. So that's where we are today. But over time, as, say, Microsoft's APIs for shaders extend, maybe this whole thing can just become shader-based. [Right now], though, traditional shaders are not optimised for the XMX style architecture.

Digital Foundry: Yeah, just for the record, I checked out [of assembly coding] with 6502, that's how old I am! I guess a more nuts and bolts question from your demo: you were showing 1080p scaled via XeSS to 4K. Will you be supporting different internal resolutions?

Tom Petersen: Yeah, I think you'll see XeSS will support multiple different configurations. There's like a quality mode or performance mode, and different input resolutions to different output resolutions. I'm not sure what that cross matrix is going to look like right now. But there's no need for this to be just 'one in, one out'.
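Since the exact matrix of modes wasn't settled at the time, here is only the arithmetic behind them. The one confirmed data point is the demo's 1080p-to-4K reconstruction, a factor of two per axis; the mode names and the 1.5x factor below are hypothetical placeholders, not Intel's figures.

```python
# Hypothetical per-axis scale factors. Only the demo's 1080p -> 4K case (2.0 per axis)
# comes from the interview; the mode names and the 1.5 factor are placeholders.
HYPOTHETICAL_MODES = {"quality": 1.5, "performance": 2.0}


def input_resolution(out_w: int, out_h: int, scale: float):
    """Internal render resolution for a given output resolution and per-axis scale."""
    return round(out_w / scale), round(out_h / scale)


def pixel_ratio(scale: float) -> float:
    """Output pixels per rendered pixel: the per-axis scale applies to width and height."""
    return scale * scale


for mode, scale in HYPOTHETICAL_MODES.items():
    w, h = input_resolution(3840, 2160, scale)
    print(f"{mode}: render {w}x{h}, {pixel_ratio(scale):.2f}x fewer pixels than 4K")
# quality: render 2560x1440, 2.25x fewer pixels than 4K
# performance: render 1920x1080, 4.00x fewer pixels than 4K
```

The squared relationship is the point: a 2x per-axis upscale means shading only a quarter of the output pixels, which is why these techniques can buy so much frame-rate.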

Digital Foundry: And on the SDK side of things, I mean, all of these features live and die by actually getting implemented in titles, right? So how open is open? Are we talking about source code on GitHub, or something more akin to what Nvidia did with the DLSS SDK?

Tom Petersen: So the way to think about it is that it's definitely in everybody's interest to have open ISV APIs. What that means is literally the same API: everybody integrates that, and underneath it you plug in different DLLs that implement the engines behind the features. That's going to take some time, right? So in the short term, what we'll probably be doing, and I think there's still a little bit of motion here, is to publish the APIs, publish the SDKs and publish references, and then effectively ISVs will know what they're getting. There's really nothing about that that feels awkward to me. Over time, you'd like to be even more open, with APIs that people can plug in underneath. That's effectively the strategy. Now, we'll still very likely have our own internal engines that plug in underneath these APIs, and whether those are going to be open or not over time isn't clear.
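The "one integration, many engines" model Petersen describes is a familiar plug-in pattern, sketched minimally in Python below. Every class name, method and capability string here is hypothetical: nothing reflects the real XeSS SDK interface, and the `upscale` bodies are placeholders where the actual kernels would run.

```python
from abc import ABC, abstractmethod


class UpscalerBackend(ABC):
    """One engine implementation that plugs in underneath the common API (hypothetical)."""
    name = "abstract"

    @abstractmethod
    def supported(self, caps: set) -> bool: ...

    @abstractmethod
    def upscale(self, color, motion_vectors, out_res): ...


class XmxBackend(UpscalerBackend):
    name = "xmx"

    def supported(self, caps):
        return "xmx" in caps

    def upscale(self, color, motion_vectors, out_res):
        return (self.name, out_res)  # placeholder for matrix-engine kernels


class Dp4aBackend(UpscalerBackend):
    name = "dp4a"

    def supported(self, caps):
        return "dp4a" in caps

    def upscale(self, color, motion_vectors, out_res):
        return (self.name, out_res)  # placeholder for int8 dot-product kernels


def create_upscaler(caps, backends=(XmxBackend(), Dp4aBackend())):
    """Pick the first backend the device supports; the caller never sees which one."""
    for backend in backends:
        if backend.supported(caps):
            return backend
    raise RuntimeError("no supported upscaler backend on this device")


# Game-side code: one integration, regardless of the backend chosen at runtime.
upscaler = create_upscaler({"dp4a"})
print(upscaler.upscale(color=None, motion_vectors=None, out_res=(3840, 2160)))
# ('dp4a', (3840, 2160))
```

The design choice is that the game only ever calls `create_upscaler` and `upscale`, so a new backend, from Intel or another vendor, can be added without the developer re-integrating.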

Digital Foundry: Okay, so is there actually a sort of limitation on which GPUs from other vendors will run it? I mean, I'm assuming there has to be some kind of machine learning acceleration involved, right?

Tom Petersen: That's really a question for other vendors, right? You've seen these machine learning style applications run on GPUs with no dedicated ML hardware at all, right? There's no reason it has to have particular hardware. It's just a performance, quality and complexity trade-off.

Digital Foundry: Here's an interesting thought that I had during the Architecture Day, which is that essentially, you have machine learning silicon not just in the GPU, but also in the CPU. Let's say, I own an older GeForce or Radeon card, and I want to tap into XeSS. Can I do that via the CPU?

Tom Petersen: Well, you know that there's an integrated GPU on most of our CPUs. So the question is, what would it look like? I'm sure you're aware of how hybrid works for most notebooks, where there's a discrete GPU render and then a copy to an integrated GPU that today does nothing other than act as a display controller, really. But now that we have technologies that are really cool, could we do something interesting on the integrated GPU? That entire space is what we call Deep Link. And with Deep Link, we're still learning so much right now, and there are so many opportunities. Today, it's just Intel products working together, but you can think about Deep Link as: what can we do in a two GPU environment or a CPU/GPU environment that's better than otherwise? So, I don't want to answer that question directly, but let's just say there's lots of opportunity in that space.

Digital Foundry: From an Intel point of view, if you're able to address machine learning silicon, does it fundamentally matter if it's on the CPU or GPU? That's kind of the question I've been pondering.

Tom Petersen: It's the performance, you know, it's the perf per watt: is the compute in the right place to affect the pixels that are moving through the pipeline? There's no religion about it. It's just, where does the science lead us? Do we have a feature that's going to deliver benefits to customers? And if we do, yeah, we'll probably do it. I mean, there will be no hesitation if we find a great technology that makes our CPUs deliver a better experience than somebody else's.

Digital Foundry: Just another quick question regarding the ray tracing setup on this GPU architecture. It has a dedicated ray tracing block that accelerates multiple things, and it looks like it sits outside the main core area. So can it run concurrently with the normal vector engines or XMX engines to further increase utilisation and saturation, or just GPU parallelism, with as much work as possible happening at the same time?

Tom Petersen: Unfortunately, I don't know the answer to that. I think yes, but I'd want to double-check. Okay, that's an excellent question, though. Stump the host!

Digital Foundry: A lot of fantastic technologies were revealed and talked about at Architecture Day. And obviously, at the end, there was the moonshot, Ponte Vecchio. This is a completely different area to what we're talking about in terms of mainstream consumer graphics. However, in principle, you were showing scalability on a multi-chip level, right? You were bringing multiple GPUs together, and from the looks of how they're linked, they seem to act as one coherent whole. Now, obviously, in the gaming space, the concept of bringing multiple GPUs together to accelerate performance died with SLI; it didn't really scale to modern architectures, and specifically not to temporal techniques. So my question, looking to the future, is: could Ponte Vecchio-style technology scale down to the consumer level?

Tom Petersen: Well, again, I don't want to talk about unreleased products, but let's back up to Ponte Vecchio. The target of Ponte Vecchio is compute, right? And compute scales very easily, very naturally. Having multiple compute die across a giant workload is just a very straightforward scaling process. There's not a heavy software mapping thing that has to happen; it's very much the way the problem is defined with supercomputer loads. That's very different on consumer. I guess I'll give you my sense, from the outside, of what's made SLI difficult: it's the multi-frame nature of SLI. AFR [alternate frame rendering] is the technology they use, and the idea is that each GPU renders its own frame, temporally separated from the other's, and then the frames are displayed in [sequence]. That technique breaks down with modern titles because of post-processing and frame-to-frame cross communication.

So, you know, to do it on consumer, we would need a new technology, a new way of partitioning work across multiple tiles. To the degree that there's high-bandwidth communication across those tiles, you can kind of ignore the fact that there are multiple tiles. If they had infinite bandwidth between them, they'd just look like one big piece of silicon, with no software-visible behaviour. Now, there's not going to be infinite bandwidth, so there's going to be some work to do that scaling across tiles. But I think that's the trend. If you just look at how silicon works and how yield works, having multiple smaller die over time is probably a very good idea - and that would need to be made to work somehow. It's not going to be anything like SLI. SLI is a technology that worked great with DX9 and DX11. It's going to be something different, I think.
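A toy timing model makes Petersen's AFR point concrete. It assumes every frame takes the same time to render, and it models a temporal dependency (TAA history, motion blur and the like) as "frame i can't start until frame i-1 has finished and been copied across to this GPU"; the numbers are illustrative, not measurements of any real SLI setup.

```python
def afr_finish_times(n_frames, render_ms, n_gpus=2, depends_on_prev=False, copy_ms=0.0):
    """Frame completion times under alternate frame rendering (frame i -> GPU i % n_gpus)."""
    gpu_free = [0.0] * n_gpus  # time at which each GPU next becomes idle
    finish = []
    for i in range(n_frames):
        gpu = i % n_gpus
        start = gpu_free[gpu]
        if depends_on_prev and i > 0:
            # A temporal effect needs frame i-1's output; if that frame was rendered
            # on another GPU, it must be copied across before this one can start.
            transfer = copy_ms if (i - 1) % n_gpus != gpu else 0.0
            start = max(start, finish[i - 1] + transfer)
        end = start + render_ms
        gpu_free[gpu] = end
        finish.append(end)
    return finish


print(afr_finish_times(4, 10.0))                                     # [10.0, 10.0, 20.0, 20.0]
print(afr_finish_times(4, 10.0, depends_on_prev=True, copy_ms=2.0))  # [10.0, 22.0, 34.0, 46.0]
print(afr_finish_times(4, 10.0, n_gpus=1))                           # [10.0, 20.0, 30.0, 40.0]
```

With independent frames, two GPUs finish frames in pairs, roughly doubling throughput. Once each frame needs its predecessor, the GPUs simply take turns waiting, and the cross-GPU copy makes AFR slower than a single GPU.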

Digital Foundry: This question is a bit more global, and it's essentially about relationships with developers, because this is just as fundamental to getting good performance as the silicon and the driver... you actually have to be there with the developer to help them optimise for specific architectures. What is Intel's vision there? How are you deploying that sort of idea?

Lisa Pearce: We've had a deep engagement with the gaming ISVs for a long time across Intel, right. But now, it's at the point of a much deeper engineering engagement. It's been building for the last two years; we know it's critical table stakes for success in high performance consumer graphics. So, we've been building stronger and stronger [relationships], giving more capable tools and more capable SDKs, and bringing them along to help make sure that we have the best possible experience for gaming on Alchemist. And in the next few years, we'd expect, you know, ultimately far more than day zero driver alignment: up front tuning, up front engagement, maybe some unique optimisations that we can go and drive in even before it's in the final stage for launch. So, we view those relationships as absolutely critical to the future of discrete graphics.

Digital Foundry: There's also been a drive to increase functionality adjacent to gaming - not specific to gaming. For example, streaming. How are things going there and what are the plans for the future?

Lisa Pearce: You know, in streaming, this is one of the cases where we really see Deep Link as an interesting technology for us to continue to improve on. It's always great when we have integrated and discrete graphics on the system. Capture and streaming is one of them - our encoders have been a strong point for some time. How do we make sure we leverage the balance of high performance, what quality levels we want... there'll be a lot of distinct solutions we want to bring there. And we'll see more about that with the Alchemist launch.

Digital Foundry: I have a little bonus question regarding the difference between the HPG and HPC setups. I noticed that the EU is quite a bit wider, around twice as wide, in HPC. What drove that design decision, and why not use it in the high performance graphics set-up?

Tom Petersen: Well, it's all about the segment that they're targeting, obviously. There's some parallelism that's more prevalent in the workloads at different segments. And I would attribute most of the architectural difference to the workloads that the architectures tune for.

Digital Foundry: For my one remaining question... returning to machine learning. Basically, it's the new frontier, right? This is where the possibilities are endless. But, you know, what is the next possibility? Obviously, super-sampling is the big one at the moment. Do you have any thoughts on where things are going to go next in the gaming arena?

Tom Petersen: I have a million thoughts! But I don't want to talk about them, Richard! I can tell you a few things, though, because, you know, it's just straightforward to me right now. We're working on post-rendered pixels, and with post-rendered pixels, you've left a lot of the information behind, earlier in the pipeline. So the question is, what about fusing in more information from further back, or maybe even starting to look at physics engines... and what about all of the other engines that are feeding into the render, like projection and geometry expansion? There are just many, many, many algorithms running prior to pixels, and all of those are candidates for feeding into some kind of generative algorithm, which is really what AI is all about. AI does two things. One is extrapolation, where it says, 'there's information here, I'm just going to move it forward in some kind of reasonable way'. But it also does hallucination, where you kind of say, I've seen things in the past that are like this, so wouldn't it be great if there was a tree here? You know, this is what AI does, and all of that stuff is perfect for games. And there are many, many different applications...