DirectX @ ETC 2000

What's Microsoft up to now?

Taking place in Sheffield last Wednesday, ETC 2000 was a conference organised by publishing giant Infogrames to give some of the thousands of game developers working for them a chance to hear about the latest technology from hardware manufacturers like NVIDIA and AMD, as well as getting the low-down on DirectX from Microsoft.

Unfortunately (for us anyway) a lot of what the hardware companies had to say was highly technical, and obviously aimed at the developers - NVIDIA's talk on how to optimise the use of vertex buffers to make the most of hardware T&L acceleration springs to mind.

But Microsoft's various presentations during the day gave an interesting insight into the potential of DirectX 7.0, as well as some hints of what will come in the next version...

State Of The Art

To kick things off, DirectX developer relations man Tony Cox gave us a look at the current state of the art when it comes to 3D graphics, picking out hardware geometry acceleration (as seen on NVIDIA's GeForce cards) as the most interesting new feature.

The first demonstration though was a standard particle fountain demo to show the raw power available to developers today, with a whole spray of little coloured particles erupting from a point source, all using alpha blending to simulate motion blur. Both the GeForce 256 and Playstation 2 have used similar demos in the past, but it's still impressive when you see it in action.

"When you have an explosion, I want it to look like this", Tony joked as he set the demo going. "Lots of cool pyrotechnics."

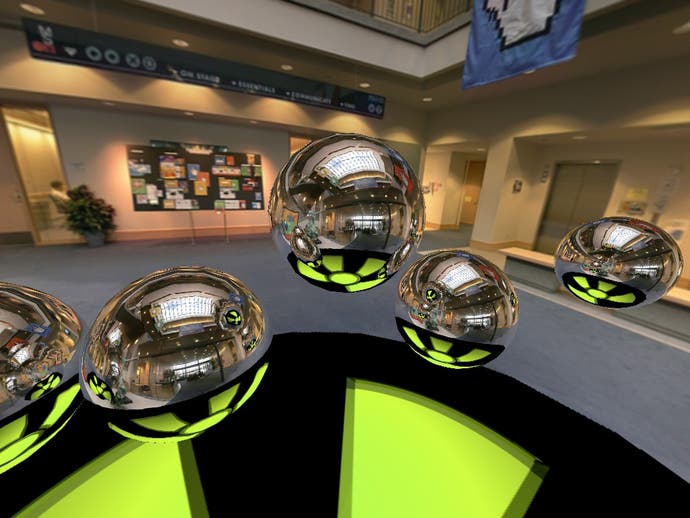

Cubic environment mapping is another GeForce feature now supported in DirectX, and the next demo used this to generate reflections on the metal balls in a "Newton's cradle". Not only could you see the room reflected in the balls, you could also see the balls reflecting each other, and of course the reflections on them...
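For the curious, the maths behind a cube-mapped reflection is pleasantly short. The sketch below is plain Python rather than Direct3D code: it reflects the view direction about the surface normal, then works out which of the six cube faces the reflected direction points at. The function names are our own, and this is the textbook technique, not anything taken from the demo itself.

```python
def reflect(incident, normal):
    """Reflect an incident direction about a unit normal: R = I - 2(N.I)N."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def cube_face(direction):
    """Pick the cube-map face a direction hits: the axis with the largest magnitude."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+X" if x > 0 else "-X"
    if ay >= az:
        return "+Y" if y > 0 else "-Y"
    return "+Z" if z > 0 else "-Z"


# A ray travelling down the Z axis hits a surface facing back up it.
bounced = reflect((0, 0, -1), (0, 0, 1))
face = cube_face(bounced)
```

In real hardware the same lookup happens per pixel, which is what lets the balls pick up the room around them.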

Next up was a demo of "skinning", animating a mesh in real time based on the movement of "bones" beneath the surface. But it was the final water demo that caught the most attention. Even Tony was taken by it, commenting that "I'm going to leave it running for a few minutes, because it's just so great."
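To give a rough idea of what skinning involves, here is a minimal sketch of the common "linear blend" approach, cut down to 2D to keep it short. Each bone moves the vertex as if the vertex were rigidly attached to it, and the results are averaged by weight. We are assuming this is broadly what the demo did; the names and the 2D simplification are ours.

```python
import math


def bone_transform(angle, tx, ty):
    """Return a function that rotates a 2D point by `angle` and then translates it."""
    c, s = math.cos(angle), math.sin(angle)
    return lambda p: (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def skin_vertex(rest_pos, influences):
    """Blend the positions each bone would give the vertex.

    `influences` is a list of (weight, transform) pairs; weights should sum to 1.
    """
    x = y = 0.0
    for weight, transform in influences:
        px, py = transform(rest_pos)
        x += weight * px
        y += weight * py
    return (x, y)


# A vertex at an elbow, shared equally by a bone that stays put
# and one that slides two units to the right.
upper_arm = bone_transform(0.0, 0.0, 0.0)
forearm = bone_transform(0.0, 2.0, 0.0)
elbow = skin_vertex((1.0, 0.0), [(0.5, upper_arm), (0.5, forearm)])
```

The vertex ends up halfway between where the two bones would each have put it, which is what keeps joints from tearing apart as a character moves.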

The demo featured a pool of water in a pre-rendered room (actually made up of photographs of a Microsoft office), which rippled, reflected and refracted in real time. The whole thing looked totally realistic ... apart from the fact that the reflections were set up wrong, so they were actually 90 degrees out of sync with the room.

"It would look a lot cooler if I'd made the files correctly", Tony admitted as he realised the mistake. "Oops."

Future Gaming

Obviously these demos were far from being real in-game applications, but they do show the kind of thing that modern hardware and Direct3D is capable of. And as hardware capabilities increase even more over the next year, we might well see games reaching this level of visual quality.

Imagine running through a pool of water in Quake 3 Arena and seeing the surface realistically ripple around you, with yourself and the room around you reflected in it. As the ripples spread (all made up of hundreds of tiny triangles, their movements calculated on the fly based on realistic physics), you can see the surface shimmering, the reflections warping, and your vision of whatever is beneath the surface refracting just like it would in real life.

Or how about replacing the sprite and polygon explosions that most games use at the moment with a massive spray of glowing particles, all generated in real time, and each lighting the surrounding architecture realistically? Sounds crazy? Maybe, but within the next couple of years we may start to see this level of detail appearing in real games.

In the more immediate future we should see more realistic reflections on shiny surfaces, more detailed models and environments, and better weapon and spell effects in games. And that's just scratching the surface...

DirectMusic

Next up was a presentation by Brian Schmidt and Chanel Summers, taking a look at DirectMusic.

"We're starting to reach that next level of cinematic music", announced Brian. "Rinky-dinky video game music won't do anymore."

DirectMusic uses a similar format to MIDI files but allows you to add in your own sounds using DLS ("DownLoadable Sounds"), as well as supplying Roland's GS sound set (with 226 instruments and nine drumkits) as standard. This means that music should always sound the same on any system, whereas MIDI always suffered because you could never be quite sure what the limited number of standard sounds would be like on different soundcards.

More importantly for gamers though, the DirectMusic API is also designed to "render" music in real time, producing interactive music that adapts to what is happening within a game, rather than just being linear and repetitive like a CD track or wave file.

As Brian pointed out, "Music, no matter how good it is, can get ... what's a polite word? Boring" when you repeat it over and over again.

The idea is that musicians set up a series of variations, different tempos and intensities, separate themes and motifs for individual characters and locations, and then DirectMusic puts it all together as the game runs to produce a unique soundtrack that fits what you see on the screen.
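As a very loose illustration of the idea, and emphatically not the DirectMusic API, the Python sketch below picks the next piece of music from a pool of composer-written variations according to the game's current intensity, and avoids playing the same one twice in a row. The segment names, the two intensity bands and the 0.5 threshold are all invented for the example.

```python
import random

# Hypothetical pools of variations a composer might supply.
VARIATIONS = {
    "low": ["ambient_a", "ambient_b", "silence"],
    "high": ["drive_a", "drive_b", "drive_c"],
}


def band_for(intensity):
    """Map a 0.0-1.0 game intensity onto one of the composer's bands."""
    return "high" if intensity >= 0.5 else "low"


def next_segment(intensity, previous=None, rng=random):
    """Choose a variation for the current intensity, never repeating the last one."""
    choices = [s for s in VARIATIONS[band_for(intensity)] if s != previous]
    return rng.choice(choices)


# The game calms down after a fight: the music follows it.
battle = next_segment(0.9)
aftermath = next_segment(0.1, previous=battle)
```

A real system also has to handle the transitions between segments musically, which is where most of the hard work lies.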

In Sanity

This was demonstrated using music from Monolith's forthcoming game, Sanity, running on a DirectMusic player which was also developed by Monolith.

Chanel tinkered with the different settings to show what effect they had on the music, and the result was pretty impressive. As the "intensity" increased the music went from ambient background music with sections of total silence to a more driving dance style. Changes were mostly done smoothly, even when the music went from high to low intensity rapidly.

"Motifs" could also be introduced, to represent the appearance of new characters or items, something which is often done in movie soundtracks. These can then be fitted to the right tempo and key by DirectMusic to make sure that it all blends in seamlessly with the music that is currently playing.

One of the Sanity demos used "bayou style" music, complete with acoustic and slide guitar samples thanks to DLS. The sound quality was excellent, and again the music varied from low intensity background muzak to faster guitar driven sections for battle scenes.

"Pre-rendered music is fine for pre-rendered video, but for real time games you should have real time music", was Brian's final sales pitch.

Sound FX

But it's not just music that can take advantage of DirectMusic - Brian also suggested that it could be used to create non-repetitive ambient sounds.

The most obvious example would be improving the crowd noise in sports games, making the level of cheering from each team's fans change dynamically depending on what was happening in the game, all blended together seamlessly in real time.

Natural sounds such as sea and wind noises and bird song are another obvious application for DirectMusic, replacing looping wave files with more random sequences that can be varied depending on the player's location and what they are doing there.

DirectMusic also includes reverb effects, and Microsoft will be adding environmental reverb, flange, chorus and compression to the next version of DirectMusic, which should make for some interesting sound effects in future games.

In his speech later in the day, Infogrames' founder Bruno Bonnell said that sound accounts for "30 to 40% of the emotion" in games and, if this demonstration of DirectMusic was anything to go by, the level of emotion that can be added by sound and music should increase rapidly over the next year or two as developers begin to replace linear audio tracks with dynamic music.

DirectPlay

The third and final presentation by Microsoft was to show off DirectPlay's new voice capabilities. Last summer Microsoft bought out Shadow Factor, the company behind Battlefield Communicator, and this technology is now being built into DirectPlay as standard.

DirectPlay Voice will support a number of different configurations - peer to peer (where each player sends voice data to all the other players), multicast (where all the data goes to a dedicated server which sends it on to the appropriate players), and mixing server (where the dedicated server mixes all the sounds for a player into a single stream).

Peer to peer will work best for small games as, in the worst case scenario where everybody is talking at once, each player will be sending and receiving one stream for every other player in the game. This soon adds up, especially if you are on an analogue modem connection.

Multicast requires a dedicated server to collect and transmit the voice data, but it does mean that each player is only sending one audio stream, although they are still receiving one from every other player who is talking.

The mixing server requires a more powerful dedicated server to combine the sound streams for every player in the game, which in a massively multiplayer title or a large team game could mean a lot of horsepower. On the bright side, it does mean that each player is now only sending and receiving a single audio stream, and their own computers don't need to spend any CPU time mixing the audio streams.

The beauty of DirectPlay Voice though is that you can have a separate server just to handle the audio mixing and transmission - you don't need to take any CPU time away from running the rest of the game.
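The trade-off between the three configurations is easiest to see as numbers. This little Python sketch counts the worst-case audio streams each player sends and receives when everybody is talking at once, using the descriptions above. The function and mode names are ours, not DirectPlay's.

```python
def streams_per_player(mode, players):
    """Return (sent, received) voice streams per player in the worst case."""
    others = players - 1
    if mode == "peer":
        return (others, others)  # one stream to and from every other player
    if mode == "multicast":
        return (1, others)       # one stream up, the server forwards the rest down
    if mode == "mixing":
        return (1, 1)            # the server mixes everything into one stream
    raise ValueError("unknown mode: " + mode)


# Worst case for an eight player game.
table = {mode: streams_per_player(mode, 8) for mode in ("peer", "multicast", "mixing")}
```

For eight players that works out at seven streams each way for peer to peer, one up and seven down for multicast, and just one each way with a mixing server.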

Doh!

Microsoft were going to demonstrate DirectPlay using a pair of PCs networked together, running a retro-fitted version of Age of Empires II with voice communication support. But unfortunately they had apparently forgotten that the headsets they were using would prevent the sound from being sent to the speaker system for the rest of us to hear.

Whoops.

Had the demonstration worked, we would have seen that DirectPlay supports a range of quality levels depending on how much bandwidth you have to spare on voice data, from 1.2 to 6.4 kbps per audio stream. Obviously this isn't going to be any use for playing music over, but for voice communication in games it should be perfectly adequate.
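To put those figures in context, here is a back-of-the-envelope calculation in Python of how many players a peer to peer voice session could hold before a modem's upstream is swamped. The 33.6 kbps uplink is our assumption, and the sketch unrealistically gives the whole of it to voice, so treat the results as upper bounds.

```python
def max_peer_to_peer_players(uplink_bps, stream_bps):
    """Largest game in which one player can send a stream to every other player.

    Works in whole bits per second to avoid floating point rounding.
    """
    streams = uplink_bps // stream_bps
    return streams + 1  # the streams go to everyone except yourself


MODEM_UPLINK_BPS = 33600  # assumed analogue modem upstream, all of it spent on voice

best_quality = max_peer_to_peer_players(MODEM_UPLINK_BPS, 6400)   # 6.4 kbps streams
worst_quality = max_peer_to_peer_players(MODEM_UPLINK_BPS, 1200)  # 1.2 kbps streams
```

At the top quality setting that is only six players even with nothing else using the line, which shows why the server-based configurations exist.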

There is also built-in support for DirectSound 3D, allowing positional audio effects to be used. So, if you are playing a first person shooter for example and the person talking to you walks behind you, the sound of their voice should track their movements.

And "adaptive queueing" means that if you have limited bandwidth the more important game data will always take priority over voice communications, so talking should never lag out your game. There are also a number of different ways of turning voice comms on and off, including voice activation (the game only broadcasts when you are talking loudly enough) and "push to activate" (where you hold down a button while you talk).
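Microsoft didn't go into how adaptive queueing works under the hood, so the following Python sketch is only a generic illustration of the principle: a send queue where game packets always jump ahead of voice packets, and where each network tick has a fixed byte budget. The class, the constants and the budget figure are all hypothetical.

```python
import heapq
import itertools

GAME, VOICE = 0, 1  # lower number is sent first


class SendQueue:
    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps packets of one kind in FIFO order

    def push(self, kind, payload):
        heapq.heappush(self._heap, (kind, next(self._order), payload))

    def drain(self, budget_bytes):
        """Send packets in priority order until the next one no longer fits the budget."""
        sent = []
        while self._heap and len(self._heap[0][2]) <= budget_bytes:
            kind, _, payload = heapq.heappop(self._heap)
            budget_bytes -= len(payload)
            sent.append((kind, payload))
        return sent


queue = SendQueue()
queue.push(VOICE, b"v" * 40)  # voice arrives first...
queue.push(GAME, b"g" * 30)
queue.push(GAME, b"h" * 30)
first_tick = queue.drain(70)   # ...but both game packets go out ahead of it
second_tick = queue.drain(70)  # the voice packet waits for the next tick
```

Here the voice packet is simply delayed by one tick; a real implementation might instead drop stale audio or fall back to a lower quality setting.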

All of this should work on any full duplex soundcard (i.e. pretty much any soundcard made in the last few years), and as the amount of bandwidth available to gamers increases, the possible applications of voice communications are spreading rapidly...

Conclusion

It's only a few years since Microsoft released the first version of DirectX, a rather poor API that most game developers avoided like the plague. But since then Microsoft have been talking to the developers to find out what they want from the API, and by 1998 it was actually becoming quite useful.

We're currently up to DirectX 7.0, and new features like voice communications, hardware geometry acceleration support, and DirectMusic's real time sound rendering capabilities have the potential to improve the quality of the new generation of 3D games.

The next version (although the speakers mostly avoided calling it DirectX 8.0 for some reason) should be in beta by the European Meltdown event in June, with a public release by the end of this summer. And judging from what we saw at ETC 2000, PC gamers are going to be in for a real treat...
