Digital Foundry vs console texture filtering

Updated with detailed developer feedback.

UPDATE 26/10/15 16:57: After publishing this feature over the weekend, Krzysztof Narkowicz, lead engine programmer at Shadow Warrior developer Flying Wild Hog, got in touch to discuss his thoughts on console anisotropic filtering and agreed that we could publish our discussion on the record. Suffice to say that the picture he paints of texture filtering levels on console is somewhat different to the conclusions we reached in last weekend's article. We hope that the information adds something to the discussion, but more than that, hopefully it addresses the vast majority of the outstanding questions surrounding the issue. And we begin with this - what's the impact of anisotropic filtering on console performance?

"It's hard to quantify the performance impact of AF on consoles, without using a devkit. Additionally, AF impact heavily depends on the specific game and a specific scene. Basically it's an apples to oranges comparison," he says.

"Different hardware has different ALU:Tex ratios, bandwidth etc. This means also different bottlenecks and different AF impact on the frame-rate. AF impact on the frame-rate also depends on the specific scene (what percentage of screen is filled with surfaces at oblique viewing angles, are those surfaces using displacement mapping, what's the main bottleneck there?) and the specific game (what percentage of the frame is spent on rendering geometry and which textures use AF)."

There's also a key difference between PC and console, an extra level of flexibility on how anisotropic filtering can be deployed - something we've seen in titles like Project Cars, The Order: 1886 and Uncharted: the Nathan Drake Collection.

"On PC (DX9, DX11, DX12) you can only set the max AF level (0, x2-x16) and the rest of the parameters (eg AF threshold/bias) are magically set by the driver. For sure AF is not free. AF requires multiple taps and has to sample a lower mipmap (very bad for texture cache), so it's a pretty heavy feature."
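To make the "multiple taps" and "lower mipmap" points concrete, here is a minimal Python sketch of the reference approximation described in the OpenGL EXT_texture_filter_anisotropic extension specification. Real PC and console GPUs use their own, undocumented variants, so treat this as an illustration rather than what any particular hardware does; the function name and the texels-per-pixel derivative inputs are our own assumptions. The "lower" mipmap Narkowicz mentions is a lower-numbered, higher-resolution level: AF picks a more detailed mip and then takes several taps along the long axis of the pixel's footprint, which touches more texels and puts more pressure on the texture cache.

```python
import math

def anisotropic_footprint(dudx, dvdx, dudy, dvdy, max_aniso):
    """Approximate AF tap count and mip level (LOD) for one pixel.

    Sketch of the approximation suggested in EXT_texture_filter_anisotropic.
    Derivatives are texture-space changes (in texels) per screen pixel.
    """
    # Length of the pixel's footprint along screen x and screen y.
    px = math.hypot(dudx, dvdx)
    py = math.hypot(dudy, dvdy)
    p_max = max(px, py)
    p_min = max(min(px, py), 1e-6)  # guard against a degenerate footprint
    # Taps along the major axis, clamped to the AF setting (1 = isotropic).
    n = min(math.ceil(p_max / p_min), max_aniso)
    # Isotropic filtering would use log2(p_max); AF divides the footprint
    # by n, so it selects a more detailed (lower-numbered) mip level.
    # Clamped at 0 here for simplicity (magnification is not modelled).
    lod = max(0.0, math.log2(p_max / n))
    return n, lod

# A floor seen at a grazing angle: 1 texel per pixel across, 8 texels per pixel deep.
for setting in (16, 4, 1):
    print(setting, anisotropic_footprint(1, 0, 0, 8, setting))
```

With 16x allowed, that floor pixel takes 8 taps from mip 0; capped at 4x it takes 4 taps from mip 1; and plain isotropic filtering (`max_aniso=1`) makes a single probe into the much blurrier mip 3.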

Narkowicz also has a very straightforward explanation for varying levels of anisotropic filtering on consoles:

"It's not about unified memory and for sure it's not about AI or gameplay influencing AF performance. It's about different trade-offs. Simply, when you release a console game you want to target exactly 30 or 60fps and tweak all the features to get best quality/performance ratio. The difference between x8 and x16 AF is impossible to spot in a normal gameplay when the camera moves dynamically," he says.

"AF x4 usually gives the best bang for the buck - you get quite good texture filtering and save some time for other features. In some cases (eg when you port a game without changing content) at the end of the project you may have some extra GPU time. This time can be used for bumping AF as it's a simple change at the end of the project, when content is locked. Applying different levels of AF on different surfaces is a pretty standard approach. Again it's about best bang for the buck and using extra time for other more important features (eg rock-solid 30fps)."
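Narkowicz's description of per-surface AF levels, plus a late bump once content is locked and GPU time is left over, might look something like the following Python sketch. Every surface category, AF level and the 1ms threshold here are hypothetical values chosen for illustration, and the sampler-state API a real engine would feed them into is not shown.

```python
# Hypothetical per-surface AF levels; categories and values are illustrative only.
# Surfaces usually seen at oblique angles (floors, roads) start at 4x,
# the "best bang for the buck" level Narkowicz describes.
BASE_AF = {"floor": 4, "road": 4, "wall": 2, "prop": 2, "character": 1}

HW_MAX_AF = 16  # 16x is the highest AF level PC APIs expose

def af_levels(spare_gpu_ms, bump_threshold_ms=1.0):
    """Return per-surface AF levels, doubling each one (capped at 16x)
    when measured spare GPU time reaches a hypothetical threshold."""
    bump = spare_gpu_ms >= bump_threshold_ms
    return {
        surface: min(level * 2, HW_MAX_AF) if bump else level
        for surface, level in BASE_AF.items()
    }

print(af_levels(0.4))  # no spare time: base levels
print(af_levels(1.5))  # content locked, time to spare: bumped levels
```

Because the bump only changes sampler settings and never the content itself, it is the kind of "simple change at the end of the project" he describes.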

Narkowicz also has some thoughts on why we sometimes see different levels of texture filtering between the consoles in multi-platform products.

"Microsoft and Sony SDKs are a bit different worlds. Microsoft is focused on being more developer-friendly and they use familiar APIs like DX11 or DX12. Sony is more about pedal to the metal. At the beginning of the console generation when documentation is a bit lacking it's easier to make mistakes using an unfamiliar API. Of course this won't be the case anymore, as now developers have more experience and a ton of great documentation."

Original story: There's an elephant in the room. Over the course of this console generation, a certain damper has been placed on the visual impact of many PS4 and Xbox One games. While we live in an era of full 1080p resolution titles, with incredible levels of detail layered into each release, the fact is that one basic graphics setting is often neglected - texture filtering. Whether it's Grand Theft Auto 5 or Metal Gear Solid 5, both consoles often fall short of even PC's moderate filtering settings, producing a far muddier presentation of the world, with textures across the game that look, for lack of a better word, blurred, and that fail to match the high expectations we had going into this generation.

Worse still, Face-Off comparisons show PS4 taking the brunt of the hit. While PS4 titles by and large offer superior resolution and frame-rates to their Xbox One counterparts, recent games like Dishonored: Definitive Edition still show Sony's console failing to match its rival in this filtering aspect. It's a common bugbear, and the effect of weak filtering on the final image can be palpable, blemishing the developer's art by smudging details close to the character. Some studios react post-launch. Ninja Theory patched higher-grade 'anisotropic filtering' into its Devil May Cry reboot on PS4 to give much clearer texture details on floors, while a similar improvement was seen in a Dying Light update. But two years into this generation, it's fair to say the trend isn't relenting, and new games like Tony Hawk's Pro Skater 5 show it's still a common foot-fault for developers, regardless of background.

But why is this? Can the hardware really be at fault, given how readily superior filtering has been patched in, as with Devil May Cry? Or could the unified memory setup on PS4 and Xbox One - a design in which both CPU and GPU are connected to the same memory resources - be more of a limit here than we might expect? We reach out to several developers for an answer, and the responses are often intriguing. It's a curious state of affairs, bearing in mind that high texture filtering settings on PC barely strain even a low-end GPU. So why do consoles so regularly fall short?

Speaking to Marco Thrush, CTO and owner of Bluepoint Games (known for its excellent Uncharted: the Nathan Drake Collection remaster project), brings us closer to an understanding. Though PS4 and Xbox One bear many overlaps in design with modern PC architecture - more so than earlier console generations - direct comparisons aren't always appropriate. Integrating CPU and GPU into one piece of silicon, then giving both components access to one large pool of memory is an example of how PC tech has been streamlined for its migration into the new wave of consoles. It offers fundamental advantages, but it comes with challenges too.

"The amount of AF [anisotropic filtering] has a big impact on memory throughput," Thrush says. "On PCs, lots of memory bandwidth is usually available because it's fully isolated to the graphics card. On consoles with shared memory architecture, that isn't quite the case, but the benefits you get from having shared memory architecture far outweigh the drawbacks."

Indeed, the advantage of PS4 and Xbox One using an APU (a CPU and GPU on one chip) is clear. It lets both components work on tasks in tandem without having to relay data through another bus interface, which can incur further latency. By having the CPU and graphics processor connected to a common resource, developers can also better take advantage of each part - as and when they're needed.

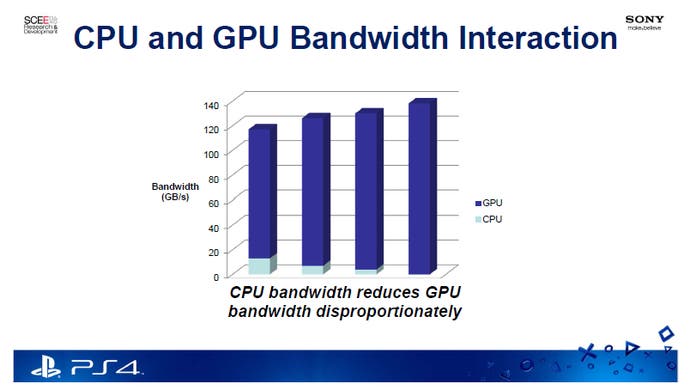

However, the fact that the CPU and GPU end up tussling for bandwidth to this RAM is a drawback - one highlighted by an internal Sony development slide shown prior to PS4's launch. Even with 8GB of fast GDDR5 RAM in place, the slide shows a correlation between the memory bandwidth consumed by the CPU and the throughput available to the GPU - and vice versa. An investigation into Ubisoft's The Crew in 2013 revealed there's a degree of partitioning here; the graphics component has its own, faster 176GB/s memory bus labeled Garlic, while the CPU's Onion bus has a peak of 20GB/s via its caches. Even so, there's a tug-of-war at play here - a balancing act that depends on the game.
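As a rough illustration of what those peak figures mean per frame, this Python sketch divides each bus's quoted peak bandwidth by the target frame-rate. These are theoretical ceilings only: as the Sony slide indicates, CPU and GPU traffic compete for the same memory, so the two budgets cannot be treated as independent, and real headroom for texture filtering is lower. The function and constant names are our own.

```python
def per_frame_budget_gb(bandwidth_gb_s, fps):
    """Theoretical peak data (in GB) a bus could move within one frame."""
    return bandwidth_gb_s / fps

GARLIC_GB_S = 176  # PS4 GPU bus peak, as quoted in the text
ONION_GB_S = 20    # PS4 CPU bus peak, as quoted in the text

for fps in (30, 60):
    garlic = per_frame_budget_gb(GARLIC_GB_S, fps)
    onion = per_frame_budget_gb(ONION_GB_S, fps)
    print(f"{fps}fps: Garlic {garlic:.2f} GB/frame, Onion {onion:.2f} GB/frame")
```

At 30fps that is roughly 5.9GB per frame over Garlic, falling to about 2.9GB at 60fps, before any CPU contention is taken into account.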

It's an idea echoed by Andrzej Poznanski, lead artist on the re-engineered PS4 version of The Vanishing of Ethan Carter. In a recent tech breakdown of the game, it's noted that games that push the CPU may have an impact on high-bandwidth graphics effects, like texture filtering.

"We do use high anisotropic filtering, but to be fair to other developers, we didn't need to spend any cycles on AI or destructible environments or weapon shaders," Poznanski says. "There was an insane amount of work behind our game's visuals, and I don't want to downplay that, but I think we did have more room for visual enhancements than some other games."

Of course, the performance hit incurred by texture filtering is often negligible on modern PCs. It taps into a graphics card's dedicated RAM, independent of CPU operations, meaning most games can go from blurry bilinear filtering to sharp 16x AF at a cost of 1-2fps. But are the same limitations at play on a PC using an APU similar to the consoles', one that forces the machine to rely on a single pool of RAM? We considered this and concluded that if contention between resources were such an issue on console, we should be able to replicate it on one of AMD's recent APUs, which are actually significantly less capable than their console counterparts.

To put this to the test, we fire up a PC featuring AMD's A10-7800 APU, paired with 8GB of DDR3 memory (clocked in this case at 2400MHz). With no other graphics card installed, the CPU and graphics sides of this APU share the same memory bandwidth in intensive games, and the same Onion and Garlic buses should be vying for system resources. What's more, if memory bandwidth is the bugbear we expect it to be - a tussle between these two halves - this machine's RAM should expose it more readily. Its DDR3 modules don't come close to the data throughput possible on console, where PS4 boasts a faster bus to GDDR5, while Xbox One supplements its slow DDR3 with a separate 32MB pool of ESRAM embedded in the APU itself.
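The size of that bandwidth gap can be estimated from transfer rate and bus width. This Python sketch computes theoretical peaks, assuming the test PC runs its DDR3 in dual-channel mode (two 64-bit channels) and treating the quoted 2400MHz as the effective DDR3-2400 data rate. The console figures use the publicly known main memory configurations of PS4 (GDDR5 at 5500MT/s on a 256-bit bus) and Xbox One (DDR3-2133 on a 256-bit bus, ESRAM excluded); the function name is our own.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits):
    """Theoretical peak bandwidth: transfers per second x bytes per transfer."""
    return mega_transfers_per_s * (bus_width_bits // 8) / 1000

# Assumption: dual-channel DDR3-2400 on the A10-7800 test PC (2 x 64-bit).
a10 = peak_bandwidth_gb_s(2400, 128)
# PS4: unified GDDR5 at 5500MT/s on a 256-bit bus.
ps4 = peak_bandwidth_gb_s(5500, 256)
# Xbox One: DDR3-2133 on a 256-bit bus (32MB ESRAM not included).
xb1 = peak_bandwidth_gb_s(2133, 256)

print(f"A10-7800: {a10:.1f} GB/s, Xbox One DDR3: {xb1:.1f} GB/s, PS4: {ps4:.1f} GB/s")
```

On those assumptions the APU test rig has under a quarter of PS4's peak bandwidth, which is what made it look like a reasonable worst case for the experiment.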

Presuming this is indeed the bottleneck, results in games should suffer as we switch texture filtering settings. However, the metrics don't bear this out at all. As you can see in our table below, frame-rates in Tomb Raider and Grand Theft Auto 5 don't drift by much more than 1fps either way, once we move from their lowest filtering settings to full 16x AF. Results are poor generally, even at 1080p with medium settings in each game - leaving us with around 30fps in each benchmark. But crucially, this number doesn't waver based on this variable.

Of course, there is a shift downwards in frame-rate when switching from Tomb Raider's bilinear setting to full 16x AF. The drop is marginal, and it shows up in most of the games tested, such as Shadow of Mordor, while Project Cars shows no change at all. However, the delta between settings is nowhere near large enough to justify dropping 8x or 16x AF outright. At these top filtering settings, the gain in image quality is substantial in a 1080p game, producing clearer surfaces that stretch on for metres ahead. It's unlikely that eking out such a small percentage of performance would persuade developers to give up such a huge boost to the visual make-up of the game.

This suggests that, if memory bandwidth is the root cause of this on console, it can't yet be replicated with common PC parts. To an extent, the specifics of PS4 and Xbox One's design remain an enigma for now.

| AMD A10-7800 APU/8GB 2400MHz DDR3 | Lowest Filtering Settings (fps) | 16x Anisotropic Filtering (fps) |
|---|---|---|
| Grand Theft Auto 5, Normal Settings, 1080p | 32.3 (no filtering) | 32.11 |
| Tomb Raider, Low Settings, 1080p | 42.6 (bilinear) | 42.0 |
| Tomb Raider, Medium Settings, 1080p | 30.4 (bilinear) | 29.2 |
| Project Cars, Low Settings, 1080p | 27.0 (trilinear) | 27.0 |
| Shadow of Mordor, Low Settings, 1080p | 27.0 (2x AF) | 26.7 |
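Frame-rate differences are easier to judge as frame time, so this Python sketch converts each row of the table above into a percentage drop and the extra milliseconds per frame that 16x AF costs. The figures come straight from the table; the helper name is our own. Note that the baselines differ (no filtering, bilinear, trilinear or 2x AF), so the rows are not strictly comparable with each other.

```python
# Frame-rates from the table: (lowest filtering setting, 16x AF), in fps.
RESULTS = {
    "Grand Theft Auto 5, Normal": (32.3, 32.11),
    "Tomb Raider, Low": (42.6, 42.0),
    "Tomb Raider, Medium": (30.4, 29.2),
    "Project Cars, Low": (27.0, 27.0),
    "Shadow of Mordor, Low": (27.0, 26.7),
}

def af_cost(fps_low, fps_af):
    """Return (percent frame-rate drop, extra milliseconds per frame)."""
    pct = (fps_low - fps_af) / fps_low * 100
    ms = 1000 / fps_af - 1000 / fps_low
    return pct, ms

for game, (low, af) in RESULTS.items():
    pct, ms = af_cost(low, af)
    print(f"{game}: {pct:.1f}% slower, +{ms:.2f} ms/frame")
```

Even the largest case, Tomb Raider at medium settings, works out to roughly 1.35ms per frame, about 4 percent of the 33.3ms budget of a 30fps game.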

Overall, while we now have direct information on why texture filtering is an issue at all on the new wave of consoles, it's fair to say that there are still some outstanding mysteries - not least why Xbox One titles often appear to feature visibly better texture filtering than their PS4 equivalents, despite Xbox One's demonstrably less capable GPU. One experienced multi-platform developer we spoke to puts forward an interesting theory: that resolution is a major factor. This generation has seen PS4 and Xbox One hit 1080p in many titles, but it's often the case that Microsoft's platform runs at a lower resolution - typically 900p - with Evolve and Project Cars being two recent cases where texture filtering favours Xbox One.

"Generally PS4 titles go for 1080p and Xbox One for 900p," he says. "Anisotropic filtering is very expensive, so it's an easy thing for the PS4 devs to drop (due to the higher resolution) to gain back performance, as very few of them can hold 1080p with AF. Xbox One, as it is running at lower res, can use the extra GPU time to do expensive AF and improve the image."
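The arithmetic behind this theory is straightforward. Assuming, as a simplification, that filtering cost scales with the number of pixels rendered, this Python sketch shows how many more pixels a 1080p frame contains than a 900p one (taking 900p as 1600x900); the function name is our own.

```python
def pixel_count(width, height):
    """Number of pixels in a frame of the given resolution."""
    return width * height

p1080 = pixel_count(1920, 1080)
p900 = pixel_count(1600, 900)
print(f"1080p: {p1080:,} px, 900p: {p900:,} px, ratio {p1080 / p900:.2f}x")
```

On that simplification, a 1080p PS4 version has to filter 44 percent more pixels per frame than a 900p Xbox One version, GPU time that has to be found somewhere, which would make AF an obvious candidate to cut.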

It's a theory that holds strong in some games, but not in others - such as Tony Hawk's Pro Skater 5, Strider, PayDay 2: Crimewave Edition or even Dishonored: Definitive Edition. In these four cases, both PS4 and Xbox One are matched with a full native 1920x1080 resolution, and yet Xbox One remains ahead in filtering clarity in each game.

Either way, the precise reason PS4 and Xbox One are so often divided like this still eludes full explanation. However, certain points are clearer now; we know unified memory has immense advantages for consoles, but also that bandwidth plays a bigger part in texture filtering quality than anticipated. And while PS4 and Xbox One have a lot in common with PC architecture, their designs are still fundamentally different in ways that haven't yet been fully explained. Responses from developers here have been insightful, but the complete answer remains out of grasp - at least for now.